I recently started playing with DALL-E 2, which will attempt to generate an image to go with whatever text prompt you give it. Like its predecessor DALL-E, it uses CLIP, which OpenAI trained on a huge collection of internet images and nearby text. I’ve experimented with a few methods based on CLIP, but DALL-E generates particularly clear, coherent images.

So of course I decided to use it to mess up corporate logos.

Literally all I had to do was ask DALL-E to generate “The local Waffle House” (as opposed to the local haunted waffle house, which is also a thing I’ve used AI to generate). Or, below, “The Pizza Hut logo”.

Or the Jeep logo.

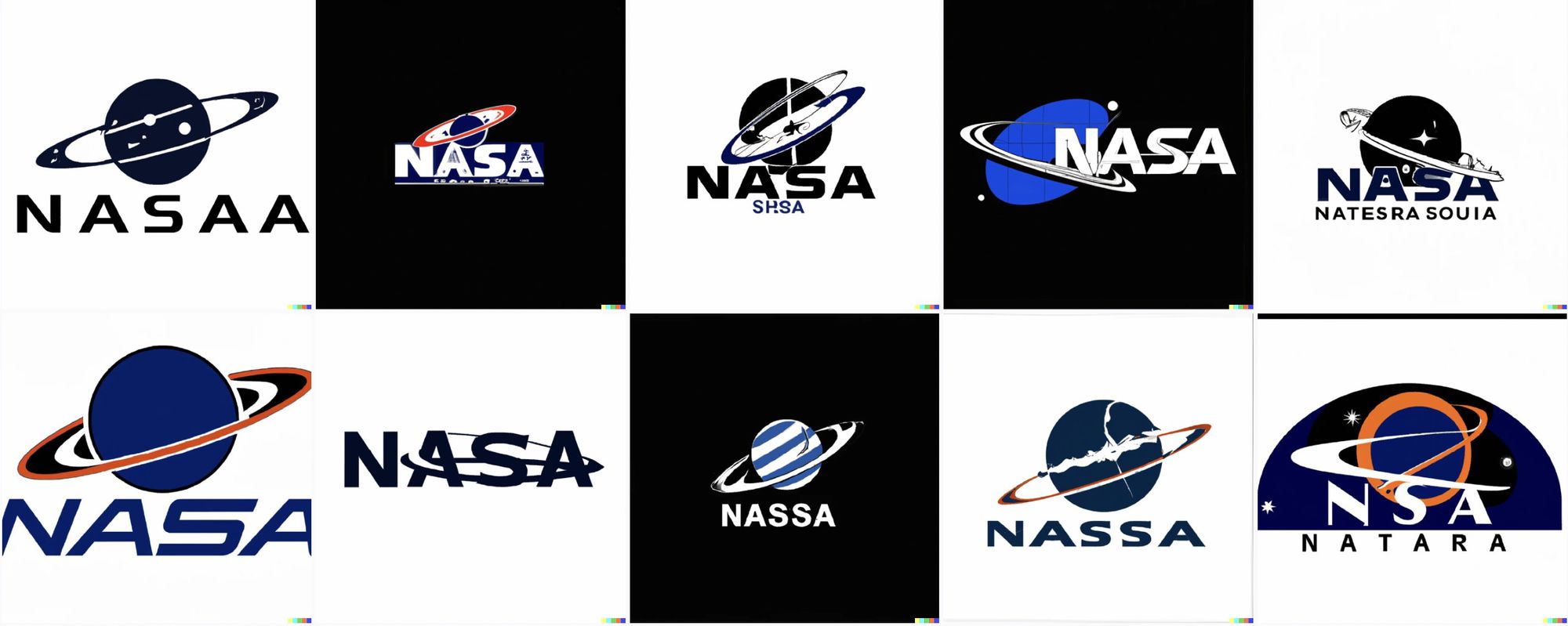

It gets some of these close enough that it MUST have learned them from information online. Like, it knew that the NASA logo involves an orb with a partial ring. But it has transformed that into a full-on Saturn, which, admittedly, is pretty cool-looking.

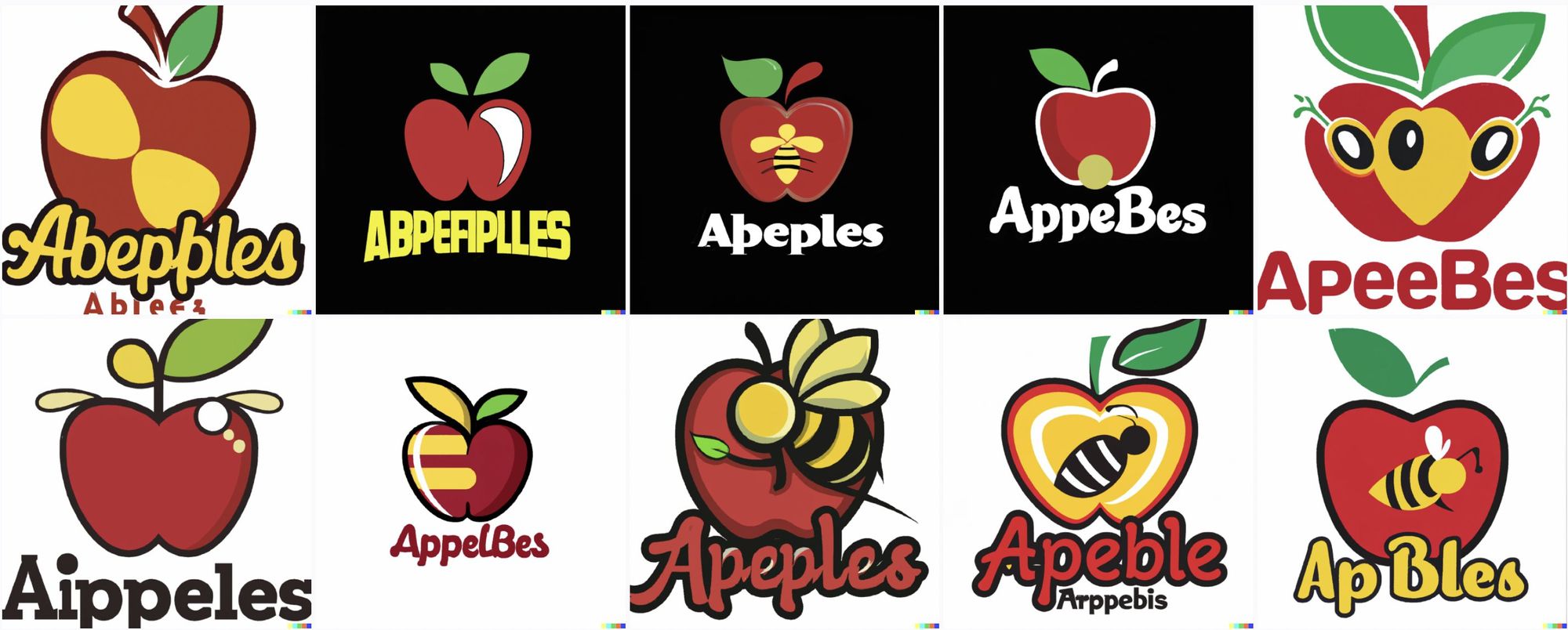

Or, in the case of Applebee’s, it seems to have decided that the logo would be better if it contained actual bees.

It seems to have picked up on the general shape of the Snickers logo. But it apparently decided to sometimes add sneakers as well.

And it’s clear that the Burger King logo definitely needs a crown on the burger.

I’d be lying if I said the spelling wasn’t hilarious. The spelling is pretty hilarious.

Even for a simple sign like “Arby’s”, it somehow manages to get the middle letters wrong in 10 out of 10 tries.

This one might be my favorite.

It has more trouble with longer text, such as its near-unrecognizable renditions of Tim Hortons.

It also apparently has trouble with vertical text, like on the original cans of Irn-Bru.

But note that In-N-Out, a California fast-food brand, has palm trees and sunny skies in the background, whereas many of the Tim Hortons signs have grey skies. There’s information being used on many levels to get the shading right and the lettering consistent.

Just not the spelling.

Bonus content: More brands, including an unexpected photorealistic goat-turtle.