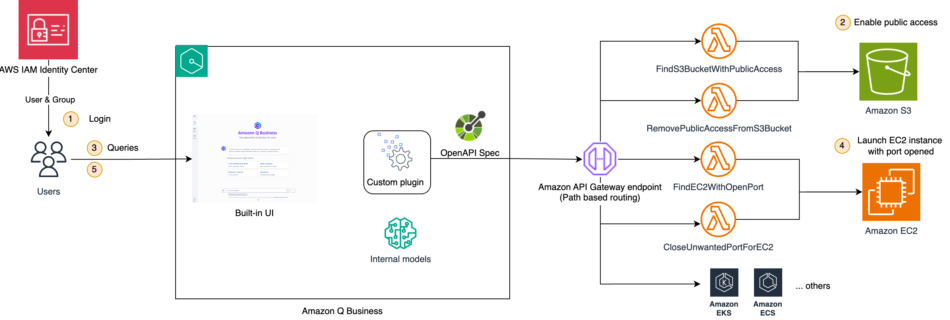

Building an AIOps chatbot with Amazon Q Business custom plugins

Many organizations rely on multiple third-party applications and services for different aspects of their operations, such as scheduling, HR management, financial data, and customer relationship management (CRM). However, these systems often exist in silos, so users must manually navigate different interfaces, switch between environments, and perform repetitive tasks, which can be time-consuming and …
