Supercharge your LLMs with RAG at scale using AWS Glue for Apache Spark

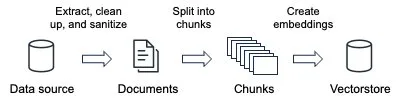

Large language models (LLMs) are very large deep learning models that are pre-trained on vast amounts of data. LLMs are highly flexible: a single model can perform very different tasks, such as answering questions, summarizing documents, translating languages, and completing sentences. LLMs have the potential to revolutionize content creation and the way people use search engines and …
