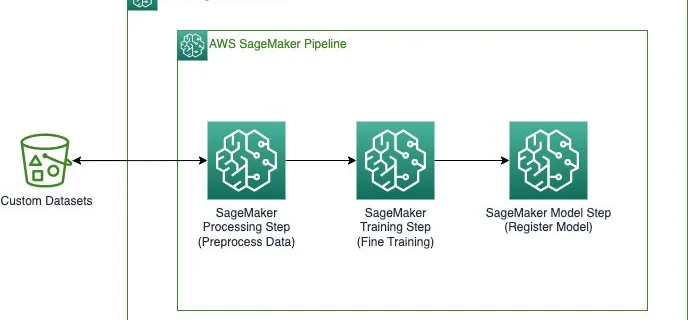

Implementing MLOps practices with Amazon SageMaker JumpStart pre-trained models

Amazon SageMaker JumpStart is the machine learning (ML) hub of SageMaker, offering over 350 built-in algorithms, pre-trained models, and pre-built solution templates to help you get started with ML quickly. JumpStart provides one-click access to a wide variety of pre-trained models for common ML tasks such as object detection, text classification, summarization, text generation …
