From LLMs to image generation: Accelerate inference workloads with AI Hypercomputer

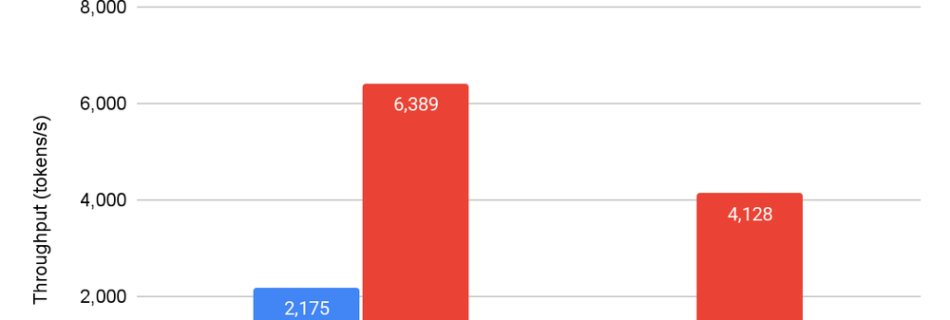

From retail to gaming, from code generation to customer care, a growing number of organizations are running LLM-based applications, with 78% of organizations in development or production today. As generative AI applications multiply and their user bases grow, performant, scalable, and easy-to-use inference technologies become critical. At Google …
