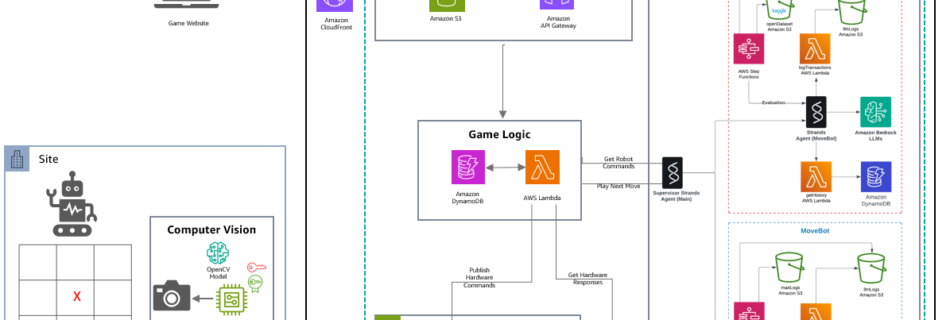

Bringing tic-tac-toe to life with AWS AI services

Large language models (LLMs) now support a wide range of use cases, from summarizing content to reasoning about complex tasks. One exciting new direction is bringing generative AI into the physical world by applying it to robotics and physical hardware. Inspired by this, we developed a game for the AWS re:Invent 2024 …