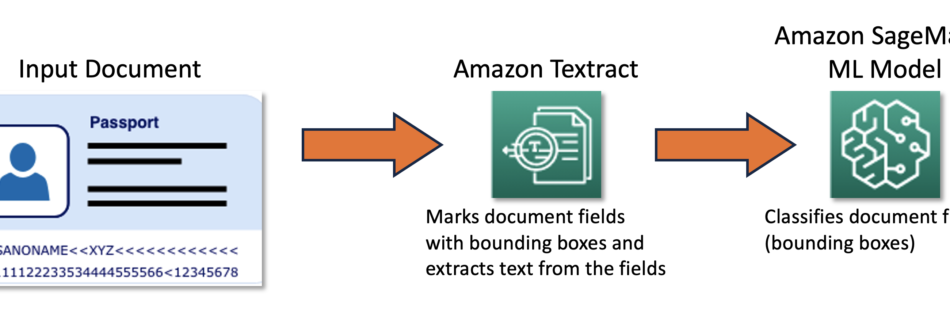

Innovation for Inclusion: Hack.The.Bias with Amazon SageMaker

This post was co-authored with Daniele Chiappalupi, a participant in the AWS student Hackathon team at ETH Zürich. Anyone can get started with machine learning (ML) using Amazon SageMaker JumpStart. In this post, we show you how a university Hackathon team used SageMaker JumpStart to quickly build an application that helps users identify and remove …
