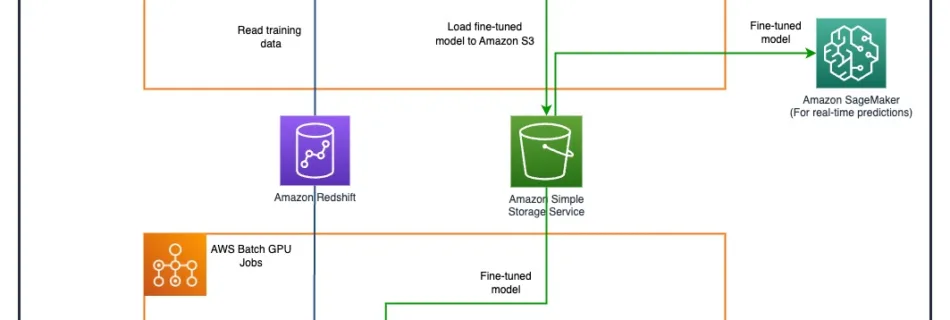

Enable faster training with Amazon SageMaker data parallel library

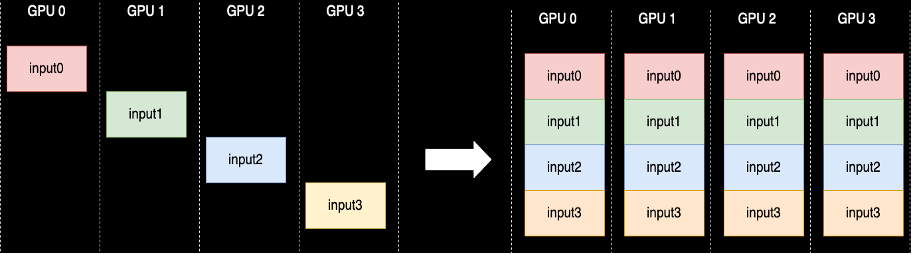

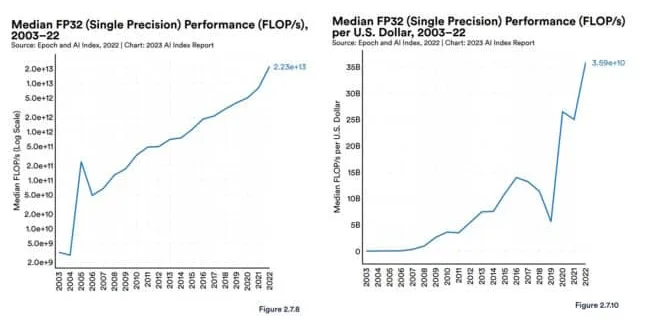

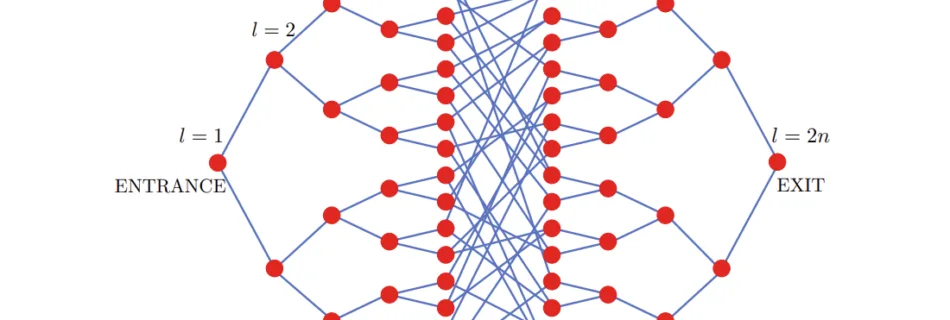

Large language model (LLM) training has become increasingly popular over the last year with the release of several publicly available models such as Llama2, Falcon, and StarCoder. Customers are now training LLMs of unprecedented size, ranging from 1 billion to over 175 billion parameters. Training these LLMs requires significant compute resources and time as hundreds …
