In natural conversations, we don’t say people’s names every time we speak to each other. Instead, we rely on contextual signaling mechanisms to initiate conversations, and eye contact is often all it takes. Google Assistant, now available in more than 95 countries and over 29 languages, has primarily relied on a hotword mechanism (“Hey Google” or “OK Google”) to help more than 700 million people every month get things done across Assistant devices. As virtual assistants become an integral part of our everyday lives, we’re developing ways to initiate conversations more naturally.

At Google I/O 2022, we announced Look and Talk, a major development in our journey to create natural and intuitive ways to interact with Google Assistant-powered home devices. This is the first multimodal, on-device Assistant feature that simultaneously analyzes audio, video, and text to determine when you are speaking to your Nest Hub Max. Using eight machine learning models together, the algorithm can differentiate intentional interactions from passing glances in order to accurately identify a user’s intent to engage with Assistant. Once within 5 ft of the device, the user may simply look at the screen and talk to start interacting with Assistant.

We developed Look and Talk in alignment with our AI Principles. It meets our strict audio and video processing requirements, and like our other camera sensing features, video never leaves the device. You can always stop, review and delete your Assistant activity at myactivity.google.com. These added layers of protection enable Look and Talk to work just for those who turn it on, while keeping your data safe.

Modeling Challenges

The journey of this feature began as a technical prototype built on top of models developed for academic research. Deployment at scale, however, required solving real-world challenges unique to this feature. It had to:

- Support a range of demographic characteristics (e.g., age, skin tones).

- Adapt to the ambient diversity of the real world, including challenging lighting (e.g., backlighting, shadow patterns) and acoustic conditions (e.g., reverberation, background noise).

- Deal with unusual camera perspectives, since smart displays are commonly used as countertop devices and look up at the user(s), unlike the frontal faces typically used in research datasets to train models.

- Run in real-time to ensure timely responses while processing video on-device.

The evolution of the algorithm involved experiments with approaches ranging from domain adaptation and personalization to domain-specific dataset development, field-testing and feedback, and repeated tuning of the overall algorithm.

Technology Overview

A Look and Talk interaction has three phases. In the first phase, Assistant uses visual signals to detect when a user is demonstrating an intent to engage with it and then “wakes up” to listen to their utterance. The second phase is designed to further validate and understand the user’s intent using visual and acoustic signals. Look and Talk considers all signals in the first and second processing phases to determine if the interaction is likely intended for Assistant. These two phases are the core Look and Talk functionality, and are discussed below. The third phase, query fulfillment, follows the typical query flow and is beyond the scope of this blog post.

Phase One: Engaging with Assistant

The first phase of Look and Talk is designed to assess whether an enrolled user is intentionally engaging with Assistant. Look and Talk uses face detection to identify the user’s presence, filters for proximity using the detected face box size to infer distance, and then uses the existing Face Match system to determine whether they are enrolled Look and Talk users.
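
As a rough illustration of how a face box size can stand in for distance, the sketch below uses a simple pinhole-camera approximation. The focal length, the average face width, and the `FaceBox` type are assumptions made for this example only; the post does not describe how Look and Talk actually maps box size to distance.

```python
from dataclasses import dataclass

# All constants are illustrative assumptions, not values from Look and Talk.
ASSUMED_FACE_WIDTH_M = 0.15       # rough width of an adult face
ASSUMED_FOCAL_LENGTH_PX = 1000.0  # camera focal length, in pixels
MAX_RANGE_M = 1.524               # 5 ft, the range mentioned in the post


@dataclass(frozen=True)
class FaceBox:
    """Hypothetical face detector output, in pixel coordinates."""
    x: float
    y: float
    width: float
    height: float


def estimate_distance_m(box: FaceBox) -> float:
    """Pinhole estimate: distance = focal_length * real_width / pixel_width."""
    if box.width <= 0:
        raise ValueError("face box width must be positive")
    return ASSUMED_FOCAL_LENGTH_PX * ASSUMED_FACE_WIDTH_M / box.width


def within_range(box: FaceBox, max_range_m: float = MAX_RANGE_M) -> bool:
    """A larger box means a closer face, so this acts as a proximity filter."""
    return estimate_distance_m(box) <= max_range_m
```

Under these assumed constants, a face that is 150 pixels wide is estimated at about 1 m and passes the filter, while a 50-pixel face is estimated at about 3 m and is rejected.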

For an enrolled user within range, a custom eye gaze model determines whether they are looking at the device. This model estimates both the gaze angle and a binary gaze-on-camera confidence from image frames using a multi-tower convolutional neural network architecture, with one tower processing the whole face and another processing patches around the eyes. Since the device screen covers a region underneath the camera that would be natural for a user to look at, we map the gaze angle and binary gaze-on-camera prediction to the device screen area. To ensure that the final prediction is resilient to spurious individual predictions and to involuntary eye blinks and saccades, we apply a smoothing function to the individual frame-based predictions.

Figure: Eye-gaze prediction and post-processing overview.
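
The post does not say which smoothing function is used. As a minimal sketch of the idea, the example below applies a sliding-window mean to per-frame gaze confidences, so that a single low-confidence frame (such as a blink) does not flip the decision. The `GazeSmoother` class, the window size, and the threshold are all hypothetical.

```python
from collections import deque


class GazeSmoother:
    """Sliding-window mean over per-frame gaze-on-screen confidences.

    Hypothetical helper: the window size and threshold are assumptions.
    """

    def __init__(self, window: int = 5, threshold: float = 0.6) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self._scores = deque(maxlen=window)
        self._threshold = threshold

    def update(self, confidence: float) -> bool:
        """Add one frame's confidence and return the smoothed decision."""
        self._scores.append(confidence)
        # Wait for a full window so one early frame cannot decide alone.
        if len(self._scores) < self._scores.maxlen:
            return False
        mean = sum(self._scores) / len(self._scores)
        return mean >= self._threshold


smoother = GazeSmoother(window=5, threshold=0.6)
# The third frame simulates a blink; the smoothed decision is still True.
decisions = [smoother.update(c) for c in [0.9, 0.9, 0.1, 0.9, 0.9]]
```

A mean is only one option; a median filter or a majority vote over the same window would serve the same purpose with different trade-offs in responsiveness.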

We enforce stricter attention requirements before informing users that the system is ready for interaction to minimize false triggers, e.g., when a passing user briefly glances at the device. Once the user looking at the device starts speaking, we relax the attention requirement, allowing the user to naturally shift their gaze.
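
One way to picture the strict-then-relaxed requirement is as a small state machine with hysteresis. The sketch below is an assumption about how such a gate could look, not the deployed logic: `AttentionGate`, `strict_frames`, and `relaxed_miss_frames` are hypothetical names and values.

```python
class AttentionGate:
    """Strict gaze requirement before engaging, relaxed once the user speaks.

    Hypothetical sketch: frame counts are illustrative assumptions.
    """

    def __init__(self, strict_frames: int = 10, relaxed_miss_frames: int = 30) -> None:
        self.strict_frames = strict_frames
        self.relaxed_miss_frames = relaxed_miss_frames
        self.engaged = False
        self._on_streak = 0   # consecutive gaze-on frames while not engaged
        self._off_streak = 0  # consecutive gaze-off frames while engaged

    def update(self, gaze_on: bool, speaking: bool) -> bool:
        if not self.engaged:
            # Strict phase: any gaze-off frame resets the streak, so a
            # passing glance never accumulates enough frames to engage.
            self._on_streak = self._on_streak + 1 if gaze_on else 0
            if self._on_streak >= self.strict_frames:
                self.engaged = True
                self._off_streak = 0
            return self.engaged

        # Relaxed phase: tolerate gaze shifts, but only while speaking.
        self._off_streak = 0 if gaze_on else self._off_streak + 1
        allowed = self.relaxed_miss_frames if speaking else 0
        if self._off_streak > allowed:
            self.engaged = False
            self._on_streak = 0
        return self.engaged
```

With `strict_frames=3`, two gaze-on frames followed by a gaze-off frame never engage the gate, while three consecutive gaze-on frames do. After that, a speaking user can look away for up to `relaxed_miss_frames` frames before the gate closes.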

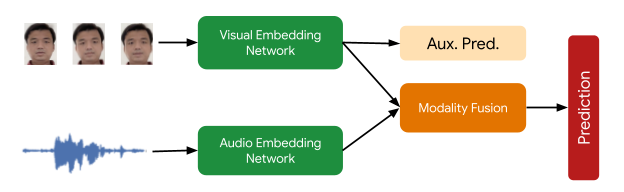

The final signal necessary in this processing phase checks that the Face Matched user is the active speaker. This is provided by a multimodal active speaker detection model that takes as input both video of the user’s face and the audio containing speech, and predicts whether they are speaking. A number of augmentation techniques (including RandAugment, SpecAugment, and augmenting with AudioSet sounds) help improve prediction quality for the in-home domain, boosting end-feature performance by over 10%. The final deployed model is a quantized, hardware-accelerated TFLite model, which uses five frames of context for the visual input and 0.5 seconds for the audio input.
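
To give a sense of what a SpecAugment-style augmentation does, the sketch below zeroes out one random band of frequency bins and one random span of time steps in a spectrogram. It covers only the masking part of SpecAugment (no time warping), uses plain Python lists for self-containment, and its mask sizes are assumptions; it is not the training pipeline used for Look and Talk.

```python
import random
from typing import List, Optional

Spectrogram = List[List[float]]  # indexed as [frequency_bin][time_step]


def mask_spectrogram(
    spec: Spectrogram,
    max_freq_mask: int = 8,
    max_time_mask: int = 10,
    rng: Optional[random.Random] = None,
) -> Spectrogram:
    """Return a copy of `spec` with one frequency band and one time span zeroed."""
    rng = rng or random.Random()
    n_freq = len(spec)
    n_time = len(spec[0]) if n_freq else 0
    out = [row[:] for row in spec]  # copy so the input is left untouched
    if n_freq == 0 or n_time == 0:
        return out

    # Frequency mask: zero `f` consecutive frequency bins starting at `f0`.
    f = rng.randint(0, min(max_freq_mask, n_freq))
    f0 = rng.randint(0, n_freq - f)
    for i in range(f0, f0 + f):
        out[i] = [0.0] * n_time

    # Time mask: zero `t` consecutive time steps starting at `t0`.
    t = rng.randint(0, min(max_time_mask, n_time))
    t0 = rng.randint(0, n_time - t)
    for row in out:
        for j in range(t0, t0 + t):
            row[j] = 0.0
    return out
```

Masking forces a model to rely on more than one narrow frequency band or one short moment of audio, which is one reason this kind of augmentation tends to help under noisy, variable acoustic conditions.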

Phase Two: Assistant Starts Listening

In phase two, the system starts listening to the content of the user’s query, still entirely on-device, to further assess whether the interaction is intended for Assistant using additional signals. First, Look and Talk uses Voice Match to further ensure that the speaker is enrolled and matches the earlier Face Match signal. Then, it runs a state-of-the-art automatic speech recognition model on-device to transcribe the utterance.

The next critical processing step is the intent understanding algorithm, which predicts whether the user’s utterance was intended to be an Assistant query. This has two parts: 1) a model that analyzes the non-lexical information in the audio (e.g., pitch, speed, hesitation sounds) to determine whether the utterance sounds like an Assistant query, and 2) a text analysis model that determines whether the transcript is an Assistant request. Together, these filter out queries not intended for Assistant. The algorithm also uses contextual visual signals to determine the likelihood that the interaction was intended for Assistant.

Figure: Overview of the semantic filtering approach to determine if a user utterance is a query intended for the Assistant.
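
The post does not specify how the acoustic, text, and visual signals are combined. Purely as an illustration, the sketch below fuses three per-signal probabilities with a weighted sum in logit space. The `IntentSignals` type, the weights, and the threshold are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class IntentSignals:
    """Hypothetical per-utterance scores, each a probability in [0, 1]."""
    acoustic_score: float  # does the audio sound like an Assistant query?
    text_score: float      # does the transcript read like a request?
    visual_score: float    # do contextual visual cues suggest engagement?


def _logit(p: float) -> float:
    p = min(max(p, 1e-6), 1.0 - 1e-6)  # clamp to avoid log(0)
    return math.log(p / (1.0 - p))


def intent_probability(
    signals: IntentSignals,
    weights: Tuple[float, float, float] = (1.0, 1.0, 0.5),  # assumed weights
    bias: float = 0.0,
) -> float:
    """Late fusion: weighted sum of logits passed through a sigmoid."""
    w_a, w_t, w_v = weights
    z = (
        bias
        + w_a * _logit(signals.acoustic_score)
        + w_t * _logit(signals.text_score)
        + w_v * _logit(signals.visual_score)
    )
    return 1.0 / (1.0 + math.exp(-z))


def is_assistant_query(signals: IntentSignals, threshold: float = 0.5) -> bool:
    return intent_probability(signals) >= threshold
```

In this sketch, strong agreement between the signals pushes the fused probability toward 0 or 1, while conflicting signals partly cancel out and leave the decision closer to the threshold.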

Finally, when the intent understanding model determines that the user utterance was likely meant for Assistant, Look and Talk moves into the fulfillment phase where it communicates with the Assistant server to obtain a response to the user’s intent and query text.

Performance, Personalization and UX

Each model that supports Look and Talk was evaluated and improved in isolation and then tested in the end-to-end Look and Talk system. The huge variety of ambient conditions in which Look and Talk operates necessitates the introduction of personalization parameters for algorithm robustness. By using signals obtained during the user’s hotword-based interactions, the system personalizes parameters to individual users to deliver improvements over the generalized global model. This personalization also runs entirely on-device.

Without a predefined hotword as a proxy for user intent, latency was a significant concern for Look and Talk. Often, a strong enough interaction signal does not occur until well after the user has started speaking, which can add hundreds of milliseconds of latency, and existing models for intent understanding add to this since they require complete, not partial, queries. To bridge this gap, Look and Talk completely forgoes streaming audio to the server and performs transcription and intent understanding on-device. The intent understanding models can also work from partial utterances. This results in an end-to-end latency comparable with current hotword-based systems.
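
To show why working from partial utterances helps latency, the sketch below scores each partial transcript as it arrives and stops as soon as the score is confident in either direction, rather than waiting for the complete query. The thresholds are assumptions, and `toy_score` is a deliberately naive stand-in for an intent model, not anything used in Look and Talk.

```python
from typing import Callable, Iterable, Optional, Tuple


def decide_on_partials(
    partials: Iterable[str],
    score_fn: Callable[[str], float],
    accept: float = 0.8,  # assumed confidence needed to accept early
    reject: float = 0.2,  # assumed confidence needed to reject early
) -> Tuple[Optional[bool], int]:
    """Return (decision, number_of_partials_consumed).

    The decision is None if no partial transcript was confident enough.
    """
    consumed = 0
    for consumed, text in enumerate(partials, start=1):
        score = score_fn(text)
        if score >= accept:
            return True, consumed
        if score <= reject:
            return False, consumed
    return None, consumed


def toy_score(partial: str) -> float:
    """Naive stand-in scorer for illustration only; not a real intent model."""
    words = partial.lower().split()
    if not words:
        return 0.5
    if words[0] in {"what", "play", "set", "turn"}:
        return 0.9  # starts like a command or question
    if len(words) >= 3:
        return 0.1  # several words in, and still no sign of a request
    return 0.5


decision, consumed = decide_on_partials(
    ["play", "play some", "play some jazz"], toy_score
)
```

Here the decision is made on the first partial transcript, before the rest of the utterance has been recognized, which is the kind of early exit that a model requiring complete queries cannot take.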

The UI experience is based on user research to provide well-balanced visual feedback with high learnability. This is illustrated in the figure below.

Figure: (Left) The spatial interaction diagram of a user engaging with Look and Talk. (Right) The User Interface (UI) experience.

We developed a diverse video dataset with over 3,000 participants to test the feature across demographic subgroups. Modeling improvements driven by diversity in our training data improved performance for all subgroups.

Conclusion

Look and Talk represents a significant step toward making user engagement with Google Assistant as natural as possible. While this is a key milestone in our journey, we hope this will be the first of many improvements to our interaction paradigms that will continue to reimagine the Google Assistant experience responsibly. Our goal is to make getting help feel natural and easy, ultimately saving time so users can focus on what matters most.

Acknowledgements

This work involved collaborative efforts from a multidisciplinary team of software engineers, researchers, UX, and cross-functional contributors. Key contributors from Google Assistant include Alexey Galata, Alice Chuang, Barbara Wang, Britanie Hall, Gabriel Leblanc, Gloria McGee, Hideaki Matsui, James Zanoni, Joanna (Qiong) Huang, Krunal Shah, Kavitha Kandappan, Pedro Silva, Tanya Sinha, Tuan Nguyen, Vishal Desai, Will Truong, Yixing Cai, Yunfan Ye; from Research including Hao Wu, Joseph Roth, Sagar Savla, Sourish Chaudhuri, Susanna Ricco. Thanks to Yuan Yuan and Caroline Pantofaru for their leadership, and everyone on the Nest, Assistant, and Research teams who provided invaluable input toward the development of Look and Talk.