Build an end-to-end RAG solution using Knowledge Bases for Amazon Bedrock and AWS CloudFormation

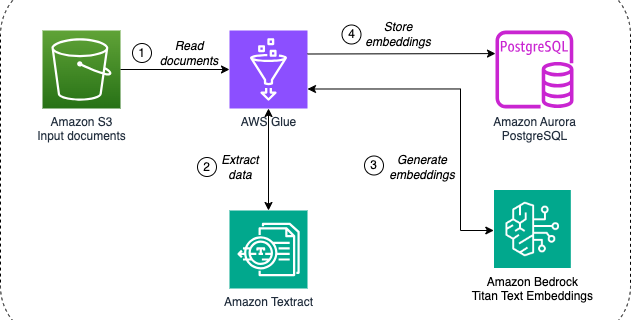

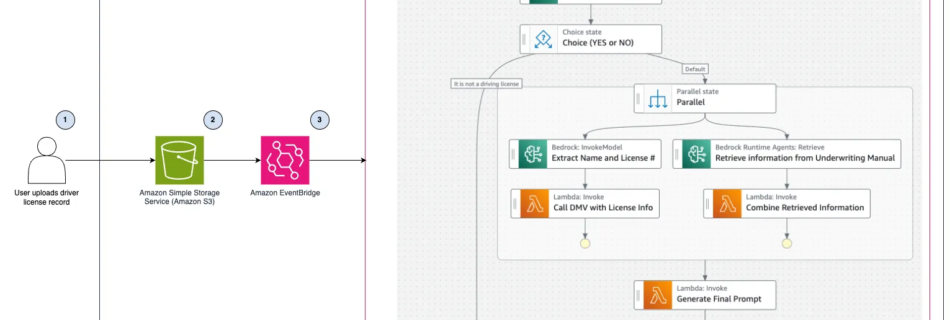

Retrieval Augmented Generation (RAG) is a state-of-the-art approach to building question-answering systems that combines the strengths of retrieval and foundation models (FMs). A RAG system first retrieves relevant information from a large corpus of text and then uses an FM to synthesize an answer based on the retrieved information. An end-to-end RAG solution involves several …
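
To make the retrieve-then-generate flow concrete, here is a minimal, library-free sketch. It is not the Knowledge Bases for Amazon Bedrock API: the function names (`retrieve`, `build_prompt`, `answer`) are hypothetical, the retriever is a toy keyword-overlap scorer standing in for a vector search, and `generate` is a placeholder for whatever FM call you would use.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, corpus, k=2):
    """Toy retriever (assumption): rank documents by token overlap with the query."""
    query_counts = Counter(tokenize(query))
    scored = []
    for doc in corpus:
        doc_counts = Counter(tokenize(doc))
        score = sum(min(query_counts[t], doc_counts[t]) for t in query_counts)
        scored.append((score, doc))
    # Stable sort keeps corpus order among documents with equal scores.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no tokens with the query.
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Place the retrieved passages in the prompt so the FM can ground its answer."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


def answer(query, corpus, generate, k=2):
    """Retrieve first, then hand the augmented prompt to the FM.

    `generate` is a hypothetical callable (prompt -> text) standing in for an FM call.
    """
    passages = retrieve(query, corpus, k)
    return generate(build_prompt(query, passages))
```

In a real deployment the overlap scorer would be replaced by an embedding-based search over a vector store, and `generate` by a call to a hosted FM; the two-stage shape stays the same.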