Palantir and Edgescale AI Join Forces

This post contains forward-looking statements within the meaning of Section 27A of the Securities Act of 1933, as amended, and Section 21E of the Securities Exchange Act of 1934, as amended. These statements may relate to, but are not limited to, Palantir’s expectations regarding the amount and the terms of the contract and the expected benefits of our software platforms. Forward-looking statements are inherently subject to risks and uncertainties, some of which cannot be predicted or quantified. Forward-looking statements are based on information available at the time those statements are made and were based on current expectations as well as the beliefs and assumptions of management as of that time with respect to future events. These statements are subject to risks and uncertainties, many of which involve factors or circumstances that are beyond our control. These risks and uncertainties include our ability to meet the unique needs of our customer; the failure of our platforms to satisfy our customer or perform as desired; the frequency or severity of any software and implementation errors; our platforms’ reliability; and our customer’s ability to modify or terminate the contract. Additional information regarding these and other risks and uncertainties is included in the filings we make with the Securities and Exchange Commission from time to time. Except as required by law, we do not undertake any obligation to publicly update or revise any forward-looking statement, whether as a result of new information, future developments, or otherwise.

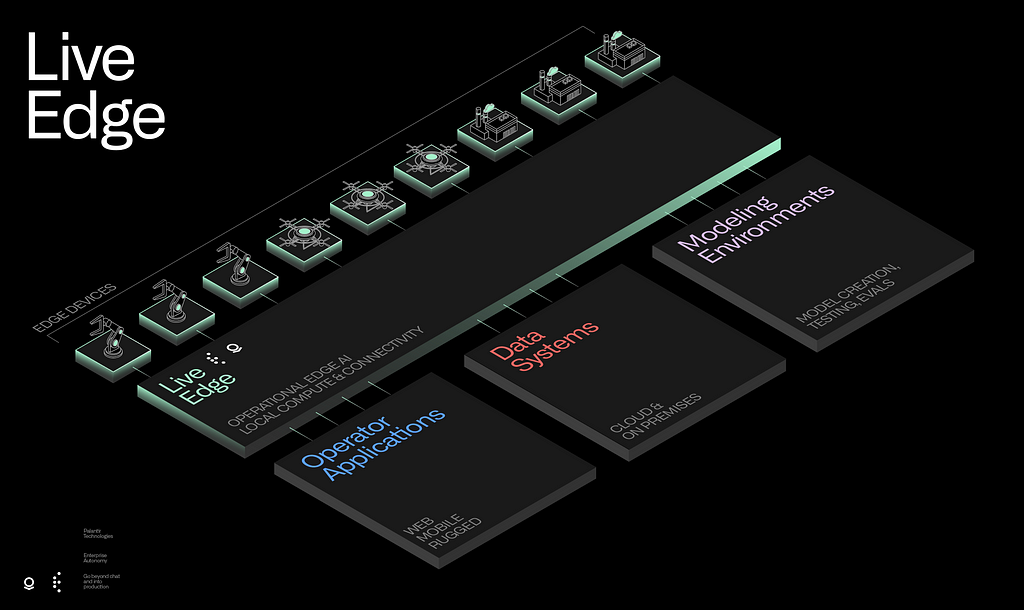

Introducing Live Edge

In today’s rapidly evolving technological landscape, enterprises use centralized cloud for integrating information, improving applications, and curating AI models on flexible infrastructure. However, the full potential of AI remains untapped in physical systems at the edge where infrastructure tends to be rigid or absent. To address this, Palantir and Edgescale AI partnered to extend the value of cloud-like infrastructure and bring AI into the physical edge ecosystem — into the real world.

Industries like manufacturing, construction, healthcare, automotive, and utilities are physical system businesses that depend on technology like machines, sensors, controllers, and networks that operates where the business does: all around us. Since the capabilities of these devices are limited, and most new investments in software, data systems, and AI are made in the centralized cloud, most applications of AI tend to overfit to cloud environments and fail to reach the physical system. The result is a critical gap in accessing essential data, interacting with devices, and applying AI in these industries.

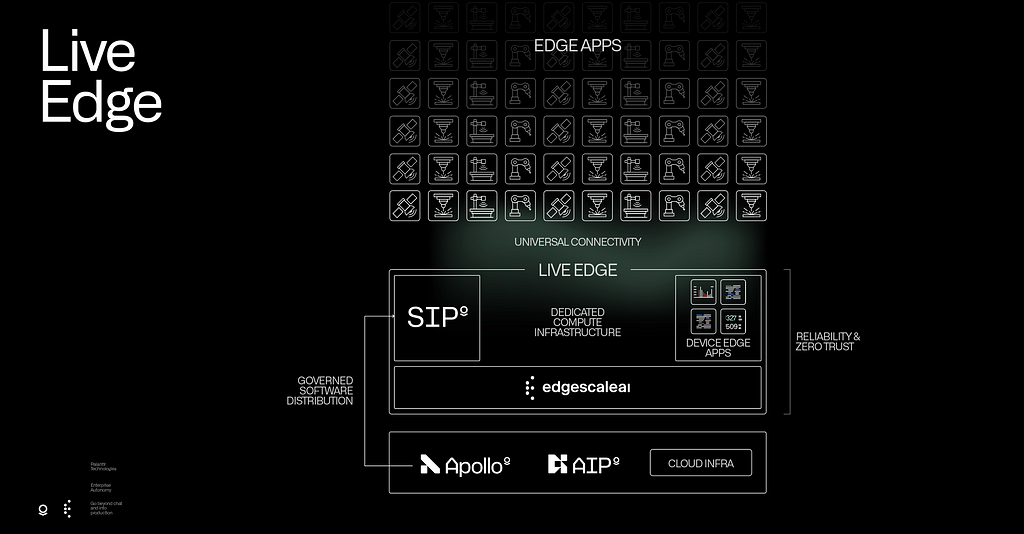

Live Edge, developed by Edgescale AI and Palantir, is a groundbreaking distribution of cloud-native software to the edge that closes this gap. Live Edge combines Palantir’s Edge AI products — Palantir Apollo and Palantir Sensor Inference Platform (SIP) — with Edgescale’s Virtual Connected Edge (VCE) cloud, to continuously deliver AI applications to the autonomous devices and the fingertips of people at the edge. We believe our partnership represents the most advanced implementation of cloud-native software over distributed infrastructure, meeting the high reliability demanded by industries. For the first time, Live Edge enables the delivery of AI applied directly to systems of action at scale.

Overcoming Challenges to Bring AI to the Edge

The implementation of AI in physical systems holds enormous potential to improve profitability and economic output. Automation of industries, such as manufacturing, allows plant owners to focus on improving productivity and activities directly related to ROI and allows plant engineers to focus on efficiency and reliability, rather than getting bogged down in protracted infrastructure builds and long-term sustainment costs. Indeed, according to McKinsey and Company, the economic potential of the Internet of Things (IoT), including physical industries, could reach over $12 trillion yearly by 2030. By comparison, a similar analysis forecasts that the economic potential of Generative AI, in non-physical systems, could reach over $4 trillion yearly. Combining the power of AI with data from physical systems amplifies these opportunities.

Yet, the promise of modernizing industries, sometimes referred to as Industry 4.0, has been met with mixed results. Significant investments have been made in enabling technologies such as the Internet of Things (IoT) and 5G, and while the recent advancements in generative AI mark a significant step forward, many companies still struggle to achieve success at scale in physical systems. According to the World Economic Forum, over 70% of firms investing in these technologies fail to progress beyond the pilot phase, a phenomenon known as “pilot purgatory.” Often, this is due to the complexity and cost of scaling these technologies, manual integration and maintenance steps, and siloed approaches. This failure to launch stands in stark contrast to the early days of cloud computing, when the barriers to starting new projects or companies were drastically reduced, sparking a boom in analytics projects and new ventures.

With Live Edge, Palantir and Edgescale AI are changing the game, overcoming these challenges, and are set to unlock the next phase of value acceleration in intelligent physical systems. This architecture will allow Palantir AIP customers to extend their AI applications further into operations in two ways. First, it will allow for scalable and reliable integration of devices, such as cameras, sensors, and machines into an organization’s ontology to be used across its fleet of applications and users. Second, Live Edge unlocks an entirely new mode to deploy AI applications to the edge inside the same ecosystem — allowing developers to explore new use cases and user groups.

In the remainder of this post, we share our experiences with the attributes and challenges of physical systems and explain how we developed Live Edge to address them.

Invisible Tech that Runs the World

Operational Technologies (OT) comprise the software and hardware that operate continuously as physical devices such as:

- Baseband units and routers: Continuously handle and sort packets traversing the internet and private networks.

- Programmable Logic Controllers and SCADA systems: Signal instructions to heavy machinery and robotics to manufacture goods and control energy grids.

- Video cameras and sensor systems: Collect a wide range of data on physical systems like flow rates in water pipelines or safety incidents along a road.

In contrast to the open and agile development of cloud-native software, these technologies are engineered for reliability and longevity with stringent requirements and specialized hardware. They are distributed and placed where they operate: at factories, warehouses, highway intersections, and power grid junctions — commonly referred to as “the edge.”

Although we do not often see or interact with these technologies unless we work in the respective industry, they are expansive and essential to the functioning of most businesses, cities, and countries. If the headquarters and the cloud are thought of as the brain of a business, then the eyes, ears, and fingertips are the operational technologies at the edge.

Facing the Challenges at the Source

The attributes of physical systems and OT present significant challenges in applying AI. Traditional cloud infrastructure presents a powerful platform on which to develop and train AI, but it neither runs nor distributes software to physical devices, nor does it facilitate communication with them. As a result, we must overcome challenges at the source, such as:

- Proprietary Tech: The majority of physical devices perform highly specialized functions. Software is frequently written in low-level programming languages and pinned to custom hardware. Data formats and communication protocols are diverse. While the benefits of standard approaches in cloud-native software are known, such as containerization, micro-service architectures, APIs, and standard observability, they haven’t yet been extensively applied to the edge due to the diversity and extreme reliability demanded.

- Obsolescence: OT requires high availability (often 99.999% or greater), given that failure or downtime has severe economic and safety implications. These systems are often installed and run in harsh environments, making them expensive to create and replace. As a result, software and devices tend to age in place while cybersecurity vulnerabilities and obsolescence crop up.

- Reachability: Given an emphasis on reliability and security, OT is typically connected only to closed, dedicated networks and is not reachable by outside systems. Operational networks tend to have limited bandwidth and are statically defined rather than software-defined. In some cases, the networks and technologies are air-gapped for additional isolation, preventing the application of key tenets of modern reliability and security frameworks such as observability, continuous delivery, and testing.

- Absence of Infrastructure: Most operational systems are composed of closed-stack proprietary devices, lacking general-purpose or special-purpose (e.g., GPUs) compute and network infrastructure to run additional software and AI. Add-on compute, such as industrial PCs (IPCs), often has inferior reliability compared to primary equipment and adds considerable overhead to maintain.

- Scale: The expansive nature of these technologies results in heterogeneity and volume far exceeding what is typically seen in cloud-based software stacks, complicating the scalability of an all-encompassing solution. Managing physical devices at the edge — sometimes thousands or millions — demands immediate consideration of scalability. Partial solutions and those requiring high customization add overhead and often fail to meet expectations on return.
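To put the 99.999% availability figure from the Obsolescence item above in concrete terms, the short sketch below converts an availability target into a yearly downtime budget. It is illustrative arithmetic only; the function name and the use of an average 365.25-day year are assumptions, not part of any Palantir or Edgescale AI product.

```python
# Downtime budget implied by an availability target (illustrative arithmetic only).
MINUTES_PER_YEAR = 365.25 * 24 * 60  # average year, including leap days


def downtime_minutes_per_year(availability: float) -> float:
    """Return the maximum yearly downtime, in minutes, for a given availability."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * MINUTES_PER_YEAR


# Compare "three nines", "four nines", and "five nines".
for target in (0.999, 0.9999, 0.99999):
    print(f"{target:.3%} -> {downtime_minutes_per_year(target):.2f} min/year")
```

At five nines the budget is a little over five minutes per year, which helps explain why operators are reluctant to touch equipment that is already working.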

Live Edge

Palantir and Edgescale AI partnered to create Live Edge, a novel solution to overcome the challenges above and to distribute AI at scale in physical systems. Palantir is a market leader in productionizing AI across a customer base that includes many physical system businesses as well as defense — with some of the most challenging operational requirements. Edgescale specializes in creating virtual cloud networks, including some of the most advanced telecommunication networks and expansive cloud-native operational systems. Live Edge is based on a combination of our unique experience and hard lessons gained to date.

We designed Live Edge with three key objectives in mind:

- Mission-critical Reliability: For virtualization and intelligent automation to provide value, they must not only meet but enhance the standards of operational availability and durability.

- Security by Design: Operational technologies should not trade off security for improvement. A modern application of key principles must improve security and address significant extant vulnerabilities in critical industries.

- Fully Managed and Opinionated Infrastructure: The cost of setup, configuration, and long-term maintenance of DIY custom solutions escalates quickly. Although it may not suit every scenario initially, we use an opinionated set of functionalities to both enable deployment at scale and reduce the burden on operations teams.

These are ambitious goals: blending cloud-native agility with mission-critical rigor and scale. Based on prior work, we believe our technology has reached sufficient maturity to make this achievable and, moreover, it is what’s required to close the gap.

Dedicated Cloud-like Infrastructure

The foundation of Live Edge is runtime compute for software capabilities, such as inference performed by Palantir SIP. Over time, we expect continued virtualization of software functionality of the physical systems as well (e.g., vRouter or vPLC), which may become co-located on Live Edge. We prefer the majority of our compute infrastructure to be dedicated physical hardware (e.g., servers) in identical configurations, hosted near the physical devices (e.g., in a local data center) yet independent from them. This has many benefits in terms of power availability, device complexity, security, potential for redundancy, and deployment cost. Compute resources are aggregated and abstracted into a Virtual Connected Edge (VCE) environment and managed by Edgescale AI via an external, cloud-based control plane. In other words, we generate an isolated and dedicated cloud per physical system and deliver it as a managed Infrastructure-as-a-Service (IaaS).

Universal Connectivity

Connectivity is essential between elements of a cooperative physical system yet is often treated as an afterthought. We notice, to the point of frustration, when it doesn’t work. Reliable connectivity is a cornerstone of Live Edge, which addresses many management, upgrade, remote maintenance, and security challenges. Live Edge solves for reliability with redundancy: an agnostic, multi-access approach steering traffic across multiple network paths, including physical connectivity (when available), WiFi, cellular, and satellite connections. Although we are limited, at times, by device capabilities (e.g., a robotic arm must have a modem to communicate), we see standardized chipsets becoming more and more capable with a clear trend towards multiple access. Connectivity is delivered as a managed service via Live Edge to gain these benefits without adding the complexity of working with multiple independent network service providers.
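The idea of reliability through redundant network paths can be illustrated with a minimal sketch. The code below is not Live Edge's steering logic; the `Path` type, the health flag, the latency values, and the assumption that path failures are statistically independent are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Path:
    name: str          # e.g. "wired", "wifi", "cellular", "satellite"
    healthy: bool      # result of a hypothetical health probe
    latency_ms: float  # hypothetical measured round-trip time


def pick_path(paths: Sequence[Path]) -> Optional[Path]:
    """Choose the lowest-latency healthy path, or None if every path is down."""
    candidates = [p for p in paths if p.healthy]
    return min(candidates, key=lambda p: p.latency_ms, default=None)


def combined_availability(availabilities: Sequence[float]) -> float:
    """Availability of redundant paths, assuming their failures are independent."""
    all_down = 1.0
    for a in availabilities:
        all_down *= 1.0 - a  # probability that this path is also down
    return 1.0 - all_down


paths = [
    Path("wired", healthy=False, latency_ms=2.0),  # simulated outage
    Path("wifi", healthy=True, latency_ms=8.0),
    Path("cellular", healthy=True, latency_ms=45.0),
    Path("satellite", healthy=True, latency_ms=600.0),
]
best = pick_path(paths)
print(best.name if best else "no path available")
```

Under the independence assumption, two paths that are each 99% available combine to roughly 99.99%. Real paths often share failure causes such as power or weather, so the actual gain is usually smaller.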

Governed Cloud Access and Distribution

Given that physical systems generate orders of magnitude more data than available communications networks can accommodate, a primary objective of Live Edge is to bring software and AI from the cloud to the data in the physical system. In doing so, we benefit from rapid advancements in software in the cloud, augment capabilities, and remediate vulnerabilities with high velocity. Live Edge employs Palantir’s Apollo framework to distribute software across a given VCE up to and onto physical devices. Apollo maintains a strict governance of distribution with dependency graphs so that changes don’t result in disruption or downtime to operational systems.
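One generic way to govern a rollout with a dependency graph is to compute an order in which every component is installed after the components it depends on, and to refuse the rollout if the graph contains a cycle. The sketch below uses Python's standard `graphlib` module for this. It is not Apollo's implementation or API, and the component names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical components; each maps to the set of components it depends on.
dependencies = {
    "inference-app": {"model-runtime", "camera-driver"},
    "model-runtime": {"base-os"},
    "camera-driver": {"base-os"},
    "base-os": set(),
}


def rollout_order(deps):
    """Return an install order in which every dependency precedes its dependents."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as err:
        # err.args[1] holds the nodes that form the detected cycle.
        raise ValueError(f"dependency cycle, refusing to roll out: {err.args[1]}") from err


order = rollout_order(dependencies)
print(order)
```

Rejecting cyclic graphs before anything is deployed is one simple safeguard against a change that could never be applied consistently.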

Reliability and Zero-Trust

The heterogeneity and distribution of physical systems present extreme challenges to reliability and a complex attack surface: in many respects, these attributes and requirements define the operational edge as it exists today. Live Edge is rooted in an application of zero-trust principles. We assume devices in a physical system can and will be compromised. Live Edge actively manages and abstracts both compute and connectivity resources of the VCE, in much the same way as the cloud, and applies end-to-end encryption, certificates, and restricted connectivity and access on a per-flow basis. Live Edge maintains strict governance on the distribution of software and autonomously tests for degradation of performance and compliance.
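Restricting access on a per-flow basis can be pictured as a default-deny check: a flow is permitted only when the sender's certificate is valid and that exact flow is explicitly allowlisted. The sketch below is a simplified illustration of that principle, not Live Edge's policy engine; the `Flow` type, the device names, the ports, and the `cert_valid` flag (standing in for real certificate verification) are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    source: str       # identity taken from a verified certificate (hypothetical)
    destination: str
    port: int


# Hypothetical allowlist: only explicitly listed flows are permitted.
ALLOWED_FLOWS = frozenset({
    Flow("camera-17", "inference-node-1", 8443),
    Flow("inference-node-1", "control-plane", 443),
})


def is_allowed(flow: Flow, cert_valid: bool) -> bool:
    """Default deny: permit a flow only if its certificate is valid and it is allowlisted."""
    return cert_valid and flow in ALLOWED_FLOWS


# A listed flow with a valid certificate passes; everything else is refused.
print(is_allowed(Flow("camera-17", "inference-node-1", 8443), cert_valid=True))
print(is_allowed(Flow("camera-17", "control-plane", 443), cert_valid=True))
```

Because nothing is trusted by default, a compromised camera in this sketch could still reach only the single inference node it was explicitly granted.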

We see reliability and security as two sides of the same coin. These approaches, applied consistently across software and devices, produce marked improvement in both reliability and security compared to ad hoc and snowflake implementations.

A New Era of Edge AI

The modernization and application of AI to physical systems is inevitable. Despite the inherent challenges, Palantir and Edgescale AI are committed to leading this transformation with Live Edge. With tens of millions of combined investment and a multi-year partnership, we believe we offer the most advanced and scalable framework to date, featuring breakthrough advancements in security, reliability, manageability, and software delivery velocity.

This is just the beginning of the journey to bridge the gap between revolutionary AI advancements and billions of physical devices. We are excited to partner with other technology companies developing physical system devices, models, and solutions and to continue improving Live Edge as we implement new use cases for enterprises globally.

Ready to start building?

Contact us: LiveEdge@Palantir.com

Revolutionizing Edge AI was originally published in Palantir Blog on Medium.