Developers building with gen AI are increasingly drawn to open models for their power and flexibility. But customizing and deploying them can be a huge challenge. You’re often left wrestling with complex dependencies, managing infrastructure, and fighting for expensive GPU access.

Don’t let that complexity slow you down.

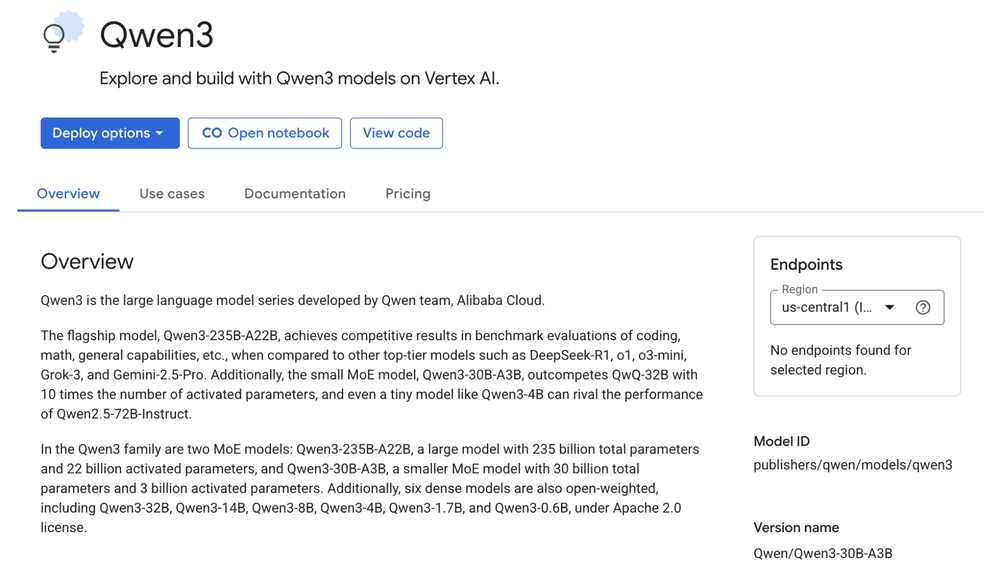

In this guide, we’ll walk you through the end-to-end lifecycle of taking an open model from discovery to a production-ready endpoint on Vertex AI. We’ll use fine-tuning and deploying Qwen3 as our example, showing you how to handle the heavy lifting so you can focus on innovation.

Part 1: Quickly choose the right base model

So you’ve decided to use an open model for your project. But which model, on what hardware, and with which serving framework? The open model universe is vast, and the “old way” of finding the right model is time-consuming. You could spend days setting up environments, downloading weights, and wrestling with requirements.txt files just to run a single test.

This is a common place for projects to stall. But with Vertex AI, your journey starts in a much better place: the Vertex AI Model Garden, a curated hub that simplifies the discovery, fine-tuning, and deployment of cutting-edge open models. It offers more than 200 validated options (and growing!), including popular choices like Gemma, Qwen, DeepSeek, and Llama. Comprehensive model cards offer crucial information, including recommended hardware (such as GPU types and sizes) for optimal performance. Additionally, Vertex AI has default quotas for dedicated on-demand capacity of the latest Google Cloud accelerators to make it easier to get started.

Qwen3 model card on Vertex AI Model Garden

Importantly, Vertex AI conducts security scans on these models and their containers, which gives you an added layer of trust and mitigates potential vulnerabilities from the outset. Once you’ve found a model for your use case, like Qwen3, Model Garden provides one-click deployment options or pre-configured notebooks (code), making it easy to deploy the model as an endpoint using the Vertex AI Inference service, ready to be integrated into your application.

Qwen3 Deployment options from Model Garden

Additionally, Model Garden provides optimized serving containers, often leveraging vLLM, SGLang, or Hex-LLM for high-throughput inference, that are specifically designed for performant model serving. Once your model is deployed (via an experimental endpoint or notebook), you can start experimenting and establishing a baseline for your use case. This baseline lets you benchmark your fine-tuned model later on.

Model Inference framework options

Qwen3 quick deployment on Endpoint

It’s important that you incorporate evaluation early on in the process. You can leverage Vertex AI’s Gen AI Evaluation Service to assess the model against your own data and criteria, or integrate open-source frameworks. This early validation helps you select the right base model with confidence.
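To make the baseline idea concrete, here is a minimal sketch of a harness that records each prompt, the model's response, and the latency, so the same prompts can be replayed against the fine-tuned model later. The `generate` callable is an assumption: it stands in for whatever client call reaches your deployed endpoint, and `fake_generate` is a hypothetical placeholder used only so the sketch runs on its own.

```python
import json
import time
from typing import Callable


def run_baseline(prompts: list[str], generate: Callable[[str], str]) -> list[dict]:
    """Send each prompt to the model and record the response and latency."""
    records = []
    for prompt in prompts:
        start = time.perf_counter()
        response = generate(prompt)
        latency_s = time.perf_counter() - start
        records.append(
            {"prompt": prompt, "response": response, "latency_s": latency_s}
        )
    return records


def save_jsonl(records: list[dict], path: str) -> None:
    """Write one JSON object per line so later runs can be compared row by row."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a request to the deployed endpoint."""
    return f"echo: {prompt}"


baseline = run_baseline(["Summarize: Vertex AI hosts open models."], fake_generate)
print(baseline[0]["response"])
```

Keeping the prompts fixed between the baseline run and the post-tuning run is what makes the later comparison meaningful.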

By the end of this experimentation and research phase, you’ll have moved efficiently from model discovery to an initial evaluation, and you’ll be ready for the next step.

Part 2: Start parameter efficient fine-tuning (PEFT) with your data

You’ve found your base model, in this case Qwen3. Now for the magic: making it yours by fine-tuning it on your specific data. This is where you can give the model a unique personality, teach it a specialized skill, or adapt it to your domain.

Step 1: Get your data ready

First you need to get your data ready. Reading data can often be a bottleneck, but Vertex AI makes it simple. You can seamlessly pull your datasets directly from Google Cloud Storage (GCS) and BigQuery (BQ). For more complex data-cleaning and preparation tasks, you can build an automated Vertex AI Pipeline to orchestrate the preprocessing work for you.
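As an illustration of a simple preparation step, the sketch below turns question/answer rows into JSON Lines with a role/content message list, a layout many chat fine-tuning tools accept. The input field names (`question`, `answer`), the `messages` layout, and the sample rows are assumptions for this example; check the tuning notebook for the dataset format it actually expects before uploading the file to Cloud Storage.

```python
import json


def to_chat_example(question: str, answer: str, system_prompt: str = "") -> dict:
    """Wrap one question/answer pair in a role/content message list."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": question.strip()})
    messages.append({"role": "assistant", "content": answer.strip()})
    return {"messages": messages}


def to_jsonl(rows: list[dict]) -> str:
    """Serialize rows as JSON Lines, skipping rows with an empty field."""
    lines = []
    for row in rows:
        question = row.get("question", "").strip()
        answer = row.get("answer", "").strip()
        if not question or not answer:
            continue  # drop incomplete rows rather than train on them
        lines.append(json.dumps(to_chat_example(question, answer), ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


# Hypothetical sample rows; the second is dropped because its answer is empty.
raw_rows = [
    {"question": " What is Model Garden? ", "answer": "A curated hub of models on Vertex AI."},
    {"question": "Unanswered question", "answer": ""},
]
jsonl_text = to_jsonl(raw_rows)
print(jsonl_text, end="")
```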

Step 2: Hands-on tuning in the notebook

Now you can start fine-tuning your Qwen3 model. For Qwen3, the Model Garden provides a pre-configured notebook that uses Axolotl, a popular framework for fine-tuning. This notebook already includes optimized settings for techniques like:

QLoRA: A highly memory-efficient tuning method, perfect for running experiments without needing massive GPUs.

FSDP (Fully Sharded Data Parallel): A technique for sharding a large model across multiple GPUs for larger-scale training.

You can run the Qwen3 fine-tuning process directly inside the notebook. This is the perfect “lab environment” for quick experiments to discover the right configuration for the fine-tuning job.
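To see why QLoRA is memory-efficient, a rough back-of-the-envelope calculation helps. The sketch below estimates the memory needed for the weights alone at 16-bit and 4-bit precision, and counts the trainable parameters a LoRA adapter adds to a single weight matrix. The 8-billion parameter count, the 4096 x 4096 matrix, and rank 16 are hypothetical values chosen for illustration, and the estimate ignores activations, optimizer state, and quantization overhead.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_in x d_out weight matrix.

    LoRA learns two low-rank factors, A (rank x d_in) and B (d_out x rank),
    instead of updating the full matrix.
    """
    return rank * (d_in + d_out)


NUM_PARAMS = 8e9  # hypothetical 8-billion-parameter model

print(weight_memory_gb(NUM_PARAMS, 16))  # 16.0 (16-bit weights)
print(weight_memory_gb(NUM_PARAMS, 4))   # 4.0 (4-bit quantized weights)

# Adapter size for one hypothetical 4096 x 4096 projection at rank 16,
# compared with the 16,777,216 parameters in the full matrix.
print(lora_params(4096, 4096, 16))       # 131072
```

In this example, the adapter trains under 1% of the parameters in the matrix it modifies, while the frozen base weights take a quarter of their 16-bit footprint.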

Step 3: Scaling up with Vertex AI training

Experimenting and getting started in a notebook is great, but you might need more GPU resources and flexibility for customization. This is when you graduate from the notebook to a formal Vertex AI Training job.

Instead of being limited by a single notebook instance, you submit your training configuration (using the same container) to Vertex AI’s managed training service, which offers more scalability, flexibility, and control. Here’s what that gives you:

On-demand accelerators: Access an on-demand pool of the latest accelerators (like H100s) when you need them, or choose DWS Flex Start, Spot GPUs, or bring-your-own-reservation options for more flexibility or stability.

Managed infrastructure: No need to provision or manage servers or containers. Vertex AI handles it all. You just define your job, and it runs.

Reproducibility: Your training job is a repeatable artifact, making it easier to use in an MLOps workflow.
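As a sketch of what "defining your job" can look like, the snippet below builds a worker pool specification as a plain Python structure, using the field names of the Vertex AI custom job API (`machine_spec`, `replica_count`, `container_spec`). The image URI and container arguments are hypothetical placeholders, not values from the notebook, and the default machine and accelerator types are just one possible H100 configuration.

```python
def build_worker_pool_specs(
    image_uri: str,
    args: list[str],
    machine_type: str = "a3-highgpu-8g",
    accelerator_type: str = "NVIDIA_H100_80GB",
    accelerator_count: int = 8,
    replica_count: int = 1,
) -> list[dict]:
    """Describe the machines and the container a custom training job should run."""
    return [
        {
            "machine_spec": {
                "machine_type": machine_type,
                "accelerator_type": accelerator_type,
                "accelerator_count": accelerator_count,
            },
            "replica_count": replica_count,
            "container_spec": {"image_uri": image_uri, "args": list(args)},
        }
    ]


# Hypothetical image and arguments; substitute the training container and
# configuration you used in the notebook.
worker_pool_specs = build_worker_pool_specs(
    image_uri="us-docker.pkg.dev/your-project/your-repo/your-training-image:latest",
    args=["--config", "gs://your-bucket/configs/your-tuning-config.yaml"],
)
print(worker_pool_specs[0]["machine_spec"])

# With the google-cloud-aiplatform SDK installed and a project configured,
# a list like this can be passed as `worker_pool_specs` when creating an
# `aiplatform.CustomJob`.
```

Because the specification is plain data, it can be stored in version control alongside the tuning configuration, which is part of what makes the job repeatable.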

Once your job is running, you can monitor its progress in real-time with TensorBoard to watch your model’s loss and accuracy improve. You can also check in on your tuning pipeline.

Beyond Vertex AI Training jobs, you can use Ray on Vertex AI or a do-it-yourself setup on GKE or GCE, depending on the flexibility and control you need.

Part 3: Evaluate your fine-tuned model

After fine-tuning your Qwen3 model on Vertex AI, robust evaluation is crucial to assess its readiness. Compare the evaluation results to the baseline you created during experimentation.

For complex generative AI tasks, Vertex AI’s Gen AI Evaluation Service uses a ‘judge’ model to assess nuanced qualities (coherence, relevance, groundedness) and task-specific criteria, and it also supports automated side-by-side (SxS) comparisons and human review. Using the Gen AI SDK, you can programmatically evaluate and compare your models. The service provides deep, actionable insights into model performance that go far beyond simple metrics like perplexity.

In the evaluation notebook, we evaluated our fine-tuned Qwen3 model against the base model using the Gen AI Evaluation Service. For each query, we provided responses from both models and used the pairwise_summarization_quality metric to let the judge model determine which performed better.
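Once the judge has picked a winner for each query, the per-query verdicts can be rolled up into win rates. The sketch below is one simple way to do that. The verdict labels (`CANDIDATE` for the fine-tuned model, `BASELINE` for the base model, `TIE`) and the sample verdicts are assumptions for illustration; adapt them to the labels that appear in your evaluation results.

```python
from collections import Counter


def summarize_pairwise(choices: list[str]) -> dict:
    """Turn per-query judge verdicts into win and tie rates."""
    if not choices:
        raise ValueError("choices must contain at least one verdict")
    counts = Counter(choices)
    total = len(choices)
    return {
        "candidate_win_rate": counts["CANDIDATE"] / total,
        "baseline_win_rate": counts["BASELINE"] / total,
        "tie_rate": counts["TIE"] / total,
    }


# Hypothetical verdicts for four queries.
verdicts = ["CANDIDATE", "CANDIDATE", "BASELINE", "TIE"]
summary = summarize_pairwise(verdicts)
print(summary)
```

A candidate win rate well above the baseline win rate suggests the fine-tuning helped on this query set, though a small sample like this one is only an illustration.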

For evaluation on other popular models, refer to this notebook.

Part 4: Deploy to a production endpoint

Your model has been fine-tuned and validated. It’s time for the final, most rewarding step: deploying it as an endpoint. This is where many projects hit a wall of complexity. With Vertex AI Inference, it’s a streamlined process. When you deploy to a Vertex AI Endpoint, you’re not just getting a server; you’re getting a fully managed, production-grade serving stack optimized for two key things:

1. Fast performance

Optimized serving: Your model is served using a container built with cutting-edge frameworks like vLLM, ensuring high throughput and low latency.

Rapid start-up: Techniques like fast VM startup, container image streaming, model weight streaming, and prefix caching mean your model can start up quickly.

2. Cost-effective and flexible scaling

You have full control over your GPU budget. You can:

Use on-demand GPUs for standard workloads.

Apply existing Committed Use Discounts (CUDs) and reservations to lower your costs.

Use Dynamic Workload Scheduler (DWS) Flex Start to acquire capacity for up to 7 days at a discount.

Leverage Spot VMs for fault-tolerant workloads to get access to compute at a steep discount.

In short, Vertex AI Inference handles the scaling, the infrastructure, and the performance optimization. You just focus on your application.

Get started

Successfully navigating the lifecycle of an open model like Qwen on Vertex AI, from initial idea to production-ready endpoint, is a significant achievement. You’ve seen how the platform provides robust support for experimentation, fine-tuning, evaluation, and deployment.

Want to explore your own open model workload? The Vertex AI Model Garden is a great place to start.