We recently announced the support for Agent-to-Agent (A2A) protocol on Amazon Bedrock AgentCore Runtime. With this addition, agents can discover peers, share capabilities, and coordinate actions across platforms using standardized communication.

Amazon Bedrock AgentCore Runtime provides a secure, serverless environment designed for deploying AI agents and tools. It works with any framework and model, supports real-time and long-running workloads, and supports session isolation with built-in authentication. With support for MCP, and now the A2A protocol, Bedrock AgentCore Runtime enables seamless communication between agents. Agents built using different frameworks, Strands Agents, OpenAI Agents SDK, LangGraph, Google ADK, or Claude Agents SDK, can share context, capabilities, and reasoning in a common, verifiable format.

In this post, we demonstrate how you can use the A2A protocol for AI agents built with different frameworks to collaborate seamlessly. You’ll learn how to deploy A2A servers on AgentCore Runtime, configure agent discovery and authentication, and build a real-world multi-agent system for incident response. We’ll cover the complete A2A request lifecycle, from agent card discovery to task delegation, showing how standardized protocols eliminate the complexity of multi-agent coordination.

Understanding multi-agent systems

Building effective agentic systems requires several foundational components. These include memory, both short-term for maintaining conversation context and long-term for retaining insights across sessions; tools that agents can access either natively or through MCP servers; identity for more secure authentication and permission management, allowing agents to act on behalf of users or autonomously access resources; and guardrails to detect harmful content, help prevent hallucinations, and make sure responses align with policies and factual accuracy.

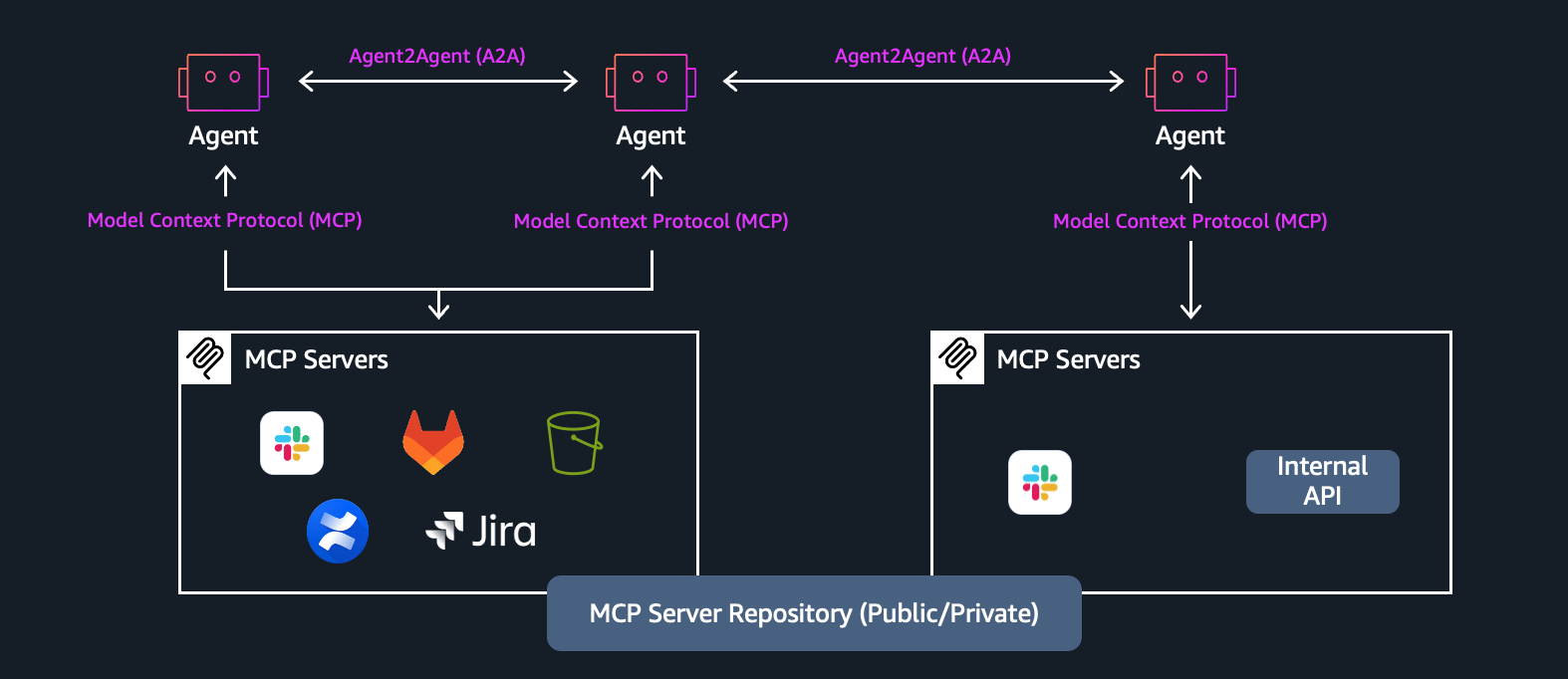

While MCP connects a single agent to its tools and data, A2A lets multiple agents coordinate with one another. For example, a retail inventory agent might use MCP to query product databases, then use A2A to communicate with external supplier agents to place orders.

The A2A protocol brings benefits to multi-agent systems through seamless interoperability across diverse boundaries. Agents built with different frameworks like Strands or OpenAI, powered by various LLMs such as Anthropic Claude, GPT-4, or Llama, and hosted on different systems including AWS or edge devices can communicate and coordinate effortlessly without requiring complex translation layers. This interoperability is complemented by loose coupling and modularity, where each agent operates as an independent unit that can be developed, tested, deployed, and even upgraded without disrupting the entire system. New specialized agents can join the environment seamlessly, and the failure of one agent remains isolated due to well-defined interaction boundaries, helping prevent cascading failures across the system. The protocol also supports dynamic agent discovery and orchestration. Agents advertise their capabilities through standardized schemas while orchestrator agents can discover and invoke specialized agents based on real-time task requirements.

A2A request lifecycle on Amazon Bedrock AgentCore Runtime

The A2A protocol defines a structured request lifecycle with specific components that work together to coordinate multi-agent communication. Here are the key elements:

- User: Initiates requests through the Client Agent, either as a human operator or automated service defining goals that require multi-agent assistance.

- A2A Client (Client Agent): Acts on behalf of the user, initiating communication using the A2A protocol to discover and request tasks from remote agents.

- A2A Server (Remote Agent): Exposes HTTP endpoints implementing the A2A protocol to receive requests, process tasks, and return results. Different agents can serve this role, handling both synchronous and asynchronous interactions using JSON-RPC 2.0 over HTTP/S or Server-Sent Events.

- Agent Card: A JSON metadata file that each agent publishes to advertise its identity, capabilities, endpoints, and authentication requirements. This enables the dynamic discovery feature, where agents query what their peer agents can do before delegating tasks.

- Task Object: Represents each unit of work flowing through the system with a unique ID and lifecycle. As agents coordinate, tasks may be long-running, involve multiple turns, and span several agents working together.

- Artifact: The output produced when a task completes, which can include structured text, JSON, images, audio, or other multimodal content. Agents exchange these artifacts as they collaborate to fulfill the user’s original request.

Multi-agent use case: Monitoring and incident response

To demonstrate the power of multi-agent systems using A2A on Amazon Bedrock AgentCore Runtime, we’ll walk through an enterprise monitoring and incident response solution. This real-world use-case showcases how specialized agents built with different frameworks coordinate seamlessly to handle complex operational challenges through the A2A protocol.

The monitoring and incident response solution implements a hub-and-spoke architecture with three specialized agents, each using Amazon Bedrock AgentCore features – modular building blocks that provide core capabilities like AgentCore Memory for context-aware responses, AgentCore Identity using Amazon Cognito for more secure authentication for agents and what action each agent can perform, AgentCore Gateway for more secure and centralized access to tools, and observability to trace, debug, and monitor AI agents’ performance. View the architecture and demonstration video below for reference:

The multi-agent system contains the following components:

- Host agent (Google ADK): Acts as the intelligent routing layer and coordination hub for the agent interactions. Demonstrates the cross-system interoperability using A2A. This agent runs on Amazon Bedrock AgentCore Runtime using Google’s Agent Development Kit, yet communicates seamlessly with agents hosted on AWS through the standardized A2A protocol. Key responsibilities of the host agent include:

- Dynamic agent discovery: Fetches Identity Provider (IDP) configuration from AWS Systems Manager Parameter Store for each remote agent, enabling more secure authentication across the multi-agent system

- Capability awareness: Retrieves agent cards from each A2A server to understand available skills and endpoints

- Intelligent routing: Analyzes user queries and routes them to the appropriate specialist agent based on capabilities

- Multi-agent coordination: Orchestrates complex workflows requiring multiple agents

- Monitoring agent (Strands Agents SDK): Serves as the operational intelligence layer, continuously analyzing CloudWatch logs, metrics, dashboards, and alarms across AWS services. This agent specializes in identifying anomalies, tracking error patterns, and surfacing actionable insights from vast amounts of telemetry data. When unusual patterns emerge, the monitoring Agent initiates conversations with other specialized agents to coordinate response actions.Key responsibilities of the monitoring agent include:

- CloudWatch integration:

- Lists and analyzes CloudWatch dashboards

- Fetches logs for specific AWS services (Lambda, ECS, EC2)

- Monitors alarms and alert states

- Analyzes log groups for patterns and errors

- Cross-account access: Supports monitoring across multiple AWS accounts

- CloudWatch integration:

- Operational agent (OpenAI SDK): Provides remediation strategies and external knowledge integration. When the monitoring agent detects a critical issue, it communicates directly with the operational agent through A2A, providing context about the problem and requesting specific remediation actions. Key responsibilities of the operational agent include:

- Web search: Uses Tavily API to search for AWS best practices, troubleshooting guides, and solutions

- Remediation strategies: Proposes solutions based on detected issues

Implementing the multi-agent monitoring solution

Now that we’ve explored how these three specialized agents collaborate to handle AWS incidents, let’s walk through how to build and deploy this multi-agent system using Amazon Bedrock AgentCore Runtime.

The implementation follows a progressive approach:

- Start with the foundation – We’ll deploy a simple A2A server to understand the core mechanics of agent deployment, authentication, and invocation on AgentCore Runtime

- Build the monitoring system – Using the same deployment patterns, we’ll construct each specialized agent (Monitoring, Operational, and Host) with their specific tools and capabilities

- Connect the agents – Configure A2A communication channels between agents, enabling them to discover and invoke each other through standardized protocols

- Observe the system in action – Watch the demo video showing real-time incident detection, cross-agent coordination, and automated response

All code examples, complete agent implementations, and deployment scripts for this multi-agent monitoring system are available in our GitHub repository.

Getting started with A2A on AgentCore Runtime

To understand the fundamentals of deploying A2A servers on Amazon Bedrock AgentCore Runtime, including step-by-step instructions for creating, testing, deploying, and invoking agents, refer to the A2A Protocol Support documentation. This guide covers:

- Creating and configuring A2A servers with any framework (Strands, OpenAI SDK, LangGraph)

- Local testing and validation

- Deployment using the AgentCore CLI

- Authentication setup (OAuth 2.0 and AWS IAM)

- Agent Card retrieval and discovery

- Client implementation for invoking deployed agents

Once you’re familiar with these fundamentals, you can apply the same patterns to build each component of the multi-agent monitoring system.

View the full example in this GitHub sample. For this post, we will focus on this use case implementation.

Prerequisites

To deploy the multi-agent monitoring system implementation, follow the prerequisite steps:

- AWS account: You need an active AWS account with appropriate permissions

- AWS CLI: Install and configure AWS CLI with your credentials

- Install uv.

- Supported Regions: This solution is currently tested and supported in the following AWS Regions.

Note: To deploy in other Regions, you’ll need to update the DynamoDB prefix list mappings in cloudformation/vpc-stack.yaml. See the VPC Stack documentation for details.

Deployment steps

This guide walks you through deploying a multi-agent system on AWS using infrastructure-as-code. The easiest way to deploy this solution is using our automated deployment script:

Step 1: Clone the repository

Step 2: Run the deployment script

This deployment script will verify that the AWS CLI is installed and configured, check if the AWS credentials are valid, confirm that the Region is set to us-west-2, interactively collect the required parameters, generate unique S3 bucket names and automatically deploy all stacks in the correct order. The approximate deployment time is 10-15 minutes.

Step 3: Provide the runtime CLI parameters

Next, provide the parameters used at deployment. Press enter for each of the options to use the default Amazon Bedrock model ID and the CloudFormation stack names for each of the agents.

API keys: You’ll need the following API keys (the deployment script will prompt for these):

- OpenAI API key: Get it from OpenAI Platform

- Tavily API key: Get it from Tavily

- Google API key: Get it from Google AI Studio

Once you have configured the information, start the deployment process and track it below in the AWS Console and terminal respectively.

Step 4: Provide the runtime CLI parameters

Run the frontend using following commands. This sets up and runs the React frontend UI that allows users to interact with the multi-agent incident response system for monitoring AWS infrastructure, querying CloudWatch metrics and logs, and searching for remediation strategies through the coordinated A2A agents.

This deployment creates a multi-agent A2A system with three specialized AI agents running on Amazon Bedrock AgentCore Runtime and orchestrated using the A2A protocol. The Cognito stack provisions OAuth 2.0-based machine-to-machine authentication by creating a Cognito user pool with four distinct client applications (WebSearch, Monitoring, Gateway, and Host Agent clients).

The monitoring agent (built with the Strands SDK) connects to CloudWatch metrics and logs through an AgentCore Gateway using a Smithy model definition, with custom semantic memory strategies for incident tracking.

The operations agent (built with OpenAI Agents SDK) interfaces with Tavily API for remediation research and the host agent (built with Google ADK) acts as the coordinator using HTTP protocol to delegate tasks to the two specialized A2A agents.

End-to-end incident response workflow

In this section, we will walk through an end-to-end workflow where the host agent manages conversations, gets the requirements from the user, and selects the best agent to route the request to (monitoring or operations agent). The monitoring and operations agent expose their agent cards that is used by the host agent for orchestration. In this example, we will test with simple error analysis from various log groups and search for remediation strategies.

The workflow includes the following steps:

- Initial greeting: The user sends a greeting message asking “Hi! How are you?” to the host agent. The host agent processes the request. The host agent responds back to the user with a friendly greeting saying “I’m doing well, thank you!”

- Capabilities query: The user asks the host agent “What are your capabilities?” to understand what the agent can do. The host agent explains to the user that it is an orchestration agent designed for AWS monitoring and operations based on the remote agent connections that it has access to.

- List log groups and dashboards: The user requests the host agent to list the log groups and dashboards in their AWS account. The host agent recognizes this is a monitoring task and executes the

transfer_to_agenttool to delegate the work. The request is transferred from the host agent to the monitoring agent for specialized handling. The monitoring agent uses the Agent-to-Agent (A2A) Json RPC Transport protocol to communicate. The monitoring agent retrieves the information and returns results showing 0 dashboards and 153 log groups found in the account. The host agent receives the results from the monitoring agent and displays the dashboards and log groups information to the user. - Analyze specific log group: The user requests the host agent to look for errors in a specific log group at path

/aws/bedrock-agentcore/runtimes/hostadk-<runtimeId>-DEFAULT. The host agent determines this requires monitoring expertise and executes thetransfer_to_agenttool. The request is transferred to the monitoring agent with instructions to analyze the specified log group for errors. The monitoring agent analyzes the log group and discovers 9 errors and 18 warnings, specifically identifying OTLP Export Failures. The host agent receives the analysis results and displays a detailed error analysis report to the user. - Debug and fix recommendations: The user asks the host agent to debug the errors and provide a report on the fixes needed. The request is transferred to the operations agent to search for solutions related to OTLP export failures. The operations agent uses A2A JsonRPC Transport to attempt the search and performs web search to provide a solution.

Security with A2A on Amazon Bedrock AgentCore Runtime

Amazon Bedrock AgentCore Runtime supports two authentication methods for securing A2A communication:

OAuth 2.0 authentication: The A2A client authenticates with an external authorization server to obtain a JSON Web Token (JWT), which is then included with all requests to the A2A server. This token-based approach enables secure, standardized authentication using either machine-to-machine (M2M) credentials or user federation, allowing the A2A server to verify the client’s identity and enforce access controls based on the token’s claims.

AWS IAM authentication: The A2A client assumes an IAM role with permissions to invoke the A2A server’s agent. This approach leverages AWS SigV4 request signing and IAM policies to control access, alleviating the need for external token management while providing fine-grained permissions.

What is supported in Amazon Bedrock AgentCore Runtime with A2A

Amazon Bedrock AgentCore Runtime provides comprehensive support for A2A communication. View some of the capabilities supported:

- Stateless server: Amazon Bedrock AgentCore Runtime can host A2A servers that expose an HTTP interface, running a stateless HTTP server on port 9000 and supporting JSON-RPC messaging. The runtime acts as a transparent proxy, passing JSON-RPC requests and responses unchanged to preserve protocol fidelity.

- Authenticated agent cards: Supports authenticated agent card at

/.well-known/agent-card.jsoncontaining its capabilities & skills allowing other agents to discover it automatically. - Authentication with secure inbound auth: Amazon Bedrock AgentCore Runtime supports secure authentication via AWS SigV4 and OAuth 2.0, making sure the agent-to-agent communication is authorized and secure. The A2A server authenticates every incoming request using the credentials provided in the HTTP headers, leveraging Amazon Bedrock AgentCore Identity.

- Authorization with secure outbound auth: Amazon Bedrock AgentCore Runtime enables secure outbound authorization through both IAM execution roles and AgentCore Identity. Each agent assumes a defined IAM execution role, granting it the necessary permissions to access AWS resources more securely. For interactions with external services, agents can use Amazon Bedrock AgentCore Identity, which provides managed OAuth 2.0 support for third-party identity providers such as Google, GitHub, Slack, and more.

- VPC connectivity: You can configure Amazon Bedrock AgentCore Runtime to connect to resources in your Amazon Virtual Private Cloud (VPC). By configuring VPC connectivity, you enable secure access to private resources such as databases, internal APIs, and services within your VPC.

- Leverage AWS PrivateLink: Amazon Bedrock AgentCore enables secure, private connections between your Virtual Private Cloud (VPC) and AgentCore services using AWS PrivateLink. By creating interface VPC endpoints, you can keep A2A server communication within your VPC without traversing the public internet.

- Lifecycle management: Amazon Bedrock AgentCore Runtime lets you configure lifecycle rules to manage resource usage with

idleRuntimeSessionTimeoutandmaxLifetime. Idle or long-running sessions are automatically terminated for efficient resource utilization and to maintain system performance.

Conclusion

The Agent-to-Agent protocol support in Amazon Bedrock AgentCore Runtime provides the support for building scalable, interoperable multi-agent systems. By providing standardized communication between AI agents, regardless of their underlying framework, model, or hosting infrastructure, organizations can compose sophisticated agentic solutions with the A2A protocol. The AWS monitoring and incident response example demonstrates the practical power of this approach: a Google ADK-based orchestrator coordinating with Strands and OpenAI SDK agents, all deployed on AgentCore Runtime, working together to detect issues, search for solutions, and recommend fixes. This level of interoperability would traditionally require extensive custom integration work, but A2A makes it straightforward through standardized protocols.As AI systems continue to evolve from single-purpose tools to collaborative environments, protocols like A2A and MCP become essential building blocks. They create a future where agents can be discovered, composed, and orchestrated dynamically, enabling organizations to build once and integrate anywhere.

About the authors

Madhur Prashant is an Applied Generative AI Architect at Amazon Web Services. He is passionate about the intersection of human thinking and Agentic AI. His interests lie in generative AI, cognitive science and specifically building solutions that are helpful and harmless, and most of all optimal for customers. Outside of work, he loves doing yoga, hiking, spending time with his twin and playing the guitar.

Madhur Prashant is an Applied Generative AI Architect at Amazon Web Services. He is passionate about the intersection of human thinking and Agentic AI. His interests lie in generative AI, cognitive science and specifically building solutions that are helpful and harmless, and most of all optimal for customers. Outside of work, he loves doing yoga, hiking, spending time with his twin and playing the guitar.

Eashan Kaushik is a Specialist Solutions Architect AI/ML at Amazon Web Services. He is driven by creating cutting-edge generative AI solutions while prioritizing a customer-centric approach to his work. Before this role, he obtained an MS in Computer Science from NYU Tandon School of Engineering. Outside of work, he enjoys sports, lifting, and running marathons.

Eashan Kaushik is a Specialist Solutions Architect AI/ML at Amazon Web Services. He is driven by creating cutting-edge generative AI solutions while prioritizing a customer-centric approach to his work. Before this role, he obtained an MS in Computer Science from NYU Tandon School of Engineering. Outside of work, he enjoys sports, lifting, and running marathons.

Sriharsha M S is a Principal Gen AI specialist solution architect in the Strategic Specialist team at Amazon Web Services. He works with strategic AWS customers who are taking advantage of AI/ML to solve complex business problems. He provides technical guidance and design advice to foundational model science and agentic AI applications at scale. His expertise spans application hardware accelerators, architecture, big data, analytics and machine learning.

Sriharsha M S is a Principal Gen AI specialist solution architect in the Strategic Specialist team at Amazon Web Services. He works with strategic AWS customers who are taking advantage of AI/ML to solve complex business problems. He provides technical guidance and design advice to foundational model science and agentic AI applications at scale. His expertise spans application hardware accelerators, architecture, big data, analytics and machine learning.

Jeffrey Burke is an Applied Generative AI Solutions Architect at Amazon Web Services (AWS), where he specializes in designing and implementing cutting-edge generative AI solutions for enterprise customers. With a passion for teaching complex technologies, he focuses on translating sophisticated AI concepts into practical, scalable solutions that drive business value. He has a MS in Data Science and BS in Chemical Engineering.

Jeffrey Burke is an Applied Generative AI Solutions Architect at Amazon Web Services (AWS), where he specializes in designing and implementing cutting-edge generative AI solutions for enterprise customers. With a passion for teaching complex technologies, he focuses on translating sophisticated AI concepts into practical, scalable solutions that drive business value. He has a MS in Data Science and BS in Chemical Engineering.

Shreyas Subramanian is a Principal Data Scientist and helps customers by using Generative AI to solve their business challenges using the AWS platform. Shreyas has a background in large scale optimization and Deep Learning, and he is a researcher studying the use of Machine Learning and Reinforcement Learning for accelerating learning and optimization tasks. Shreyas is also an Amazon best-selling book author with several research papers and patents to his name.

Shreyas Subramanian is a Principal Data Scientist and helps customers by using Generative AI to solve their business challenges using the AWS platform. Shreyas has a background in large scale optimization and Deep Learning, and he is a researcher studying the use of Machine Learning and Reinforcement Learning for accelerating learning and optimization tasks. Shreyas is also an Amazon best-selling book author with several research papers and patents to his name.

Andy Palmer is a Director of Technology for AWS Strategic Accounts. His teams provide Specialist Solutions Architecture skills across a number of speciality domain areas, including AIML, generative AI, data and analytics, security, network, and open source software. Andy and his team have been at the forefront of guiding our most advanced customers through their generative AI journeys and helping to find ways to apply these new tools to both existing problem spaces and net new innovations and product experiences.

Andy Palmer is a Director of Technology for AWS Strategic Accounts. His teams provide Specialist Solutions Architecture skills across a number of speciality domain areas, including AIML, generative AI, data and analytics, security, network, and open source software. Andy and his team have been at the forefront of guiding our most advanced customers through their generative AI journeys and helping to find ways to apply these new tools to both existing problem spaces and net new innovations and product experiences.

Sayee Kulkarni is a Software Development Engineer on the AWS Bedrock AgentCore service. Her team is responsible for building and maintaining the AgentCore Runtime platform, a foundational component that enables customers to leverage agentic AI capabilities. She is driven by delivering tangible customer value, and this customer-centric focus motivates her work. Sayee played a key role in designing and launching Agent-to-Agent (A2A) capabilities for AgentCore, empowering customers to build sophisticated multi-agent systems that autonomously collaborate to solve complex business challenges.

Sayee Kulkarni is a Software Development Engineer on the AWS Bedrock AgentCore service. Her team is responsible for building and maintaining the AgentCore Runtime platform, a foundational component that enables customers to leverage agentic AI capabilities. She is driven by delivering tangible customer value, and this customer-centric focus motivates her work. Sayee played a key role in designing and launching Agent-to-Agent (A2A) capabilities for AgentCore, empowering customers to build sophisticated multi-agent systems that autonomously collaborate to solve complex business challenges.