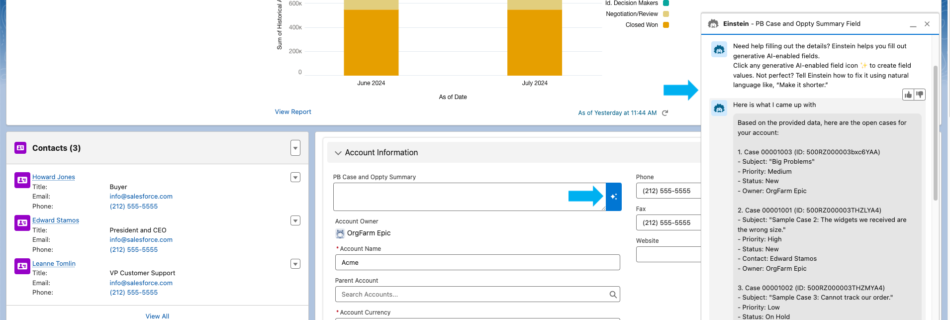

Build generative AI–powered Salesforce applications with Amazon Bedrock

This post is co-authored by Daryl Martis and Darvish Shadravan from Salesforce. This is the fourth post in a series discussing the integration of Salesforce Data Cloud and Amazon SageMaker. In Part 1 and Part 2, we show how integrating Salesforce Data Cloud and Einstein Studio with SageMaker allows businesses to access their Salesforce data …
