Generative AI is a type of AI that can create new content and ideas, including conversations, stories, images, videos, and music. It’s powered by large language models (LLMs) that are pre-trained on vast amounts of data and commonly referred to as foundation models (FMs).

With the advent of these LLMs or FMs, customers can build generative AI applications for advertising, knowledge management, and customer support. These applications can give customers better insights and improve efficiency across the organization by making information retrieval easier and automating certain time-consuming tasks.

With generative AI on AWS, you can reinvent your applications, create entirely new customer experiences, and improve overall productivity.

In this post, we build a secure enterprise application using AWS Amplify that invokes Amazon SageMaker JumpStart foundation models deployed as Amazon SageMaker endpoints and uses Amazon OpenSearch Service, to show how to implement text-to-text generation, text-to-image generation, and Retrieval Augmented Generation (RAG). You can use this post as a reference to build secure enterprise applications in the generative AI domain using AWS services.

Solution overview

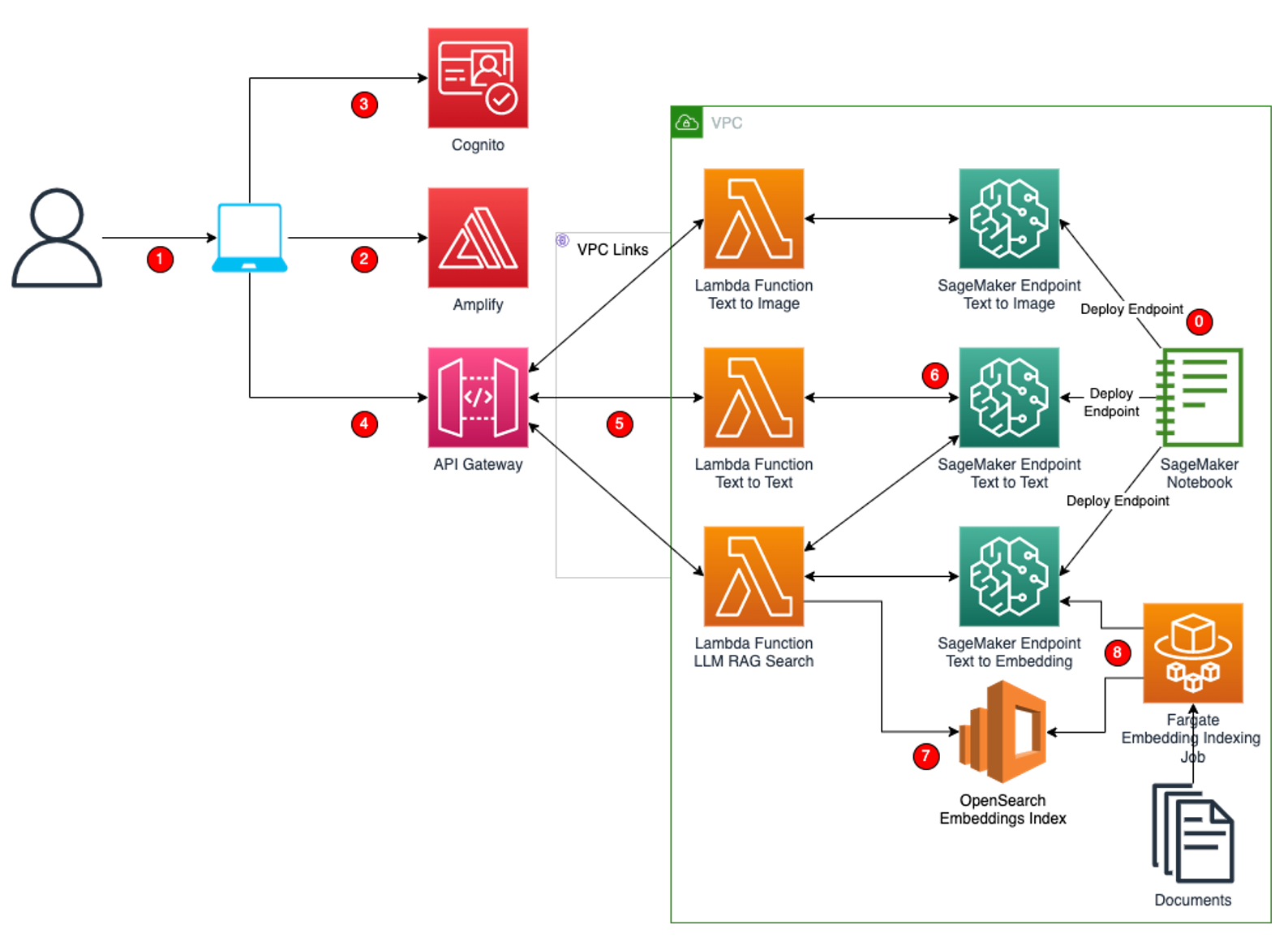

This solution uses SageMaker JumpStart models to deploy text-to-text, text-to-image, and text embeddings models as SageMaker endpoints. These SageMaker endpoints are consumed in the Amplify React application through Amazon API Gateway and AWS Lambda functions. To protect the application and APIs from unauthorized access, Amazon Cognito is integrated into Amplify React, API Gateway, and Lambda functions. SageMaker endpoints and Lambda functions are deployed in a private VPC, so the communication from API Gateway to Lambda functions is protected using API Gateway VPC links. The preceding workflow diagram illustrates this solution.

The workflow includes the following steps:

- Initial setup: SageMaker JumpStart FMs are deployed as SageMaker endpoints, with three endpoints created from SageMaker JumpStart models. The text-to-image model is a Stability AI Stable Diffusion foundation model that is used for generating images. The text-to-text model deployed in the solution for generating text is a Hugging Face Flan T5 XL model. The text-embeddings model is a Hugging Face GPT-J 6B FP16 embeddings model. It generates the embeddings that are indexed in Amazon OpenSearch Service and the embeddings used to search for context matching an incoming question. Alternative LLMs can be deployed based on the use case and model performance benchmarks. For more information about foundation models, see Getting started with Amazon SageMaker JumpStart.

- You access the React application from your computer. The React app has three pages: a page that takes image prompts and displays the image generated; a page that takes text prompts and displays the generated text; and a page that takes a question, finds the context matching the question, and displays the answer generated by the text-to-text model.

- The React app, built using Amplify libraries, is hosted on Amplify and served to the user at the Amplify host URL. Amplify provides the hosting environment for the React application. The Amplify CLI is used to bootstrap the Amplify hosting environment and deploy the code into it.

- If you have not been authenticated, you will be authenticated against Amazon Cognito using the Amplify React UI library.

- When you provide an input and submit the form, the request is processed via API Gateway.

- Lambda functions sanitize the user input and invoke the respective SageMaker endpoints. Lambda functions also construct the prompts from the sanitized user input in the respective format expected by the LLM. These Lambda functions also reformat the output from the LLMs and send the response back to the user.

- SageMaker endpoints are deployed for text-to-text (Flan T5 XXL), text-to-embeddings (GPT-J 6B), and text-to-image (Stability AI) models. Three separate endpoints are deployed, each using the recommended default SageMaker instance type.

- Embeddings for documents are generated using the text-to-embeddings model, and these embeddings are indexed into OpenSearch Service. A k-Nearest Neighbor (k-NN) index is enabled so that the embeddings can be searched in OpenSearch Service.

- An AWS Fargate job takes documents and segments them into smaller chunks, invokes the text-to-embeddings model, and indexes the returned embeddings into OpenSearch Service for searching context as described previously.
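The Lambda step in the workflow above (sanitize the input, build the prompt, invoke the endpoint, reformat the output) could be sketched as follows. This is a minimal illustration, not the code from the sample repository. The endpoint name, the prompt template, the length limit, and the request and response fields (`text_inputs`, `generated_texts`) are assumptions that you should check against the model you deploy.

```python
import json
import re

MAX_PROMPT_CHARS = 1000                   # hypothetical limit, not from the post
TEXT_ENDPOINT_NAME = "text2text-endpoint"  # hypothetical endpoint name


def sanitize(user_input: str) -> str:
    """Replace control characters, collapse whitespace, and cap the length."""
    text = re.sub(r"[\x00-\x1f\x7f]", " ", user_input)
    text = re.sub(r"\s+", " ", text).strip()
    return text[:MAX_PROMPT_CHARS]


def build_prompt(question: str, context: str = "") -> str:
    """Build the prompt sent to the text-to-text model (illustrative template)."""
    if not context:
        return question
    return (
        "Answer the question based on the context below.\n\n"
        f"Context: {context}\n\nQuestion: {question}\n\nAnswer:"
    )


def invoke_text_endpoint(client, endpoint_name: str, prompt: str) -> str:
    """Call the SageMaker endpoint and return the first generated text."""
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        # Assumed request format for the text-to-text model.
        Body=json.dumps({"text_inputs": prompt}).encode("utf-8"),
    )
    payload = json.loads(response["Body"].read())
    # Assumed response format for the text-to-text model.
    return payload["generated_texts"][0]


def handler(event, context, client=None):
    """Hypothetical Lambda entry point behind API Gateway."""
    if client is None:
        import boto3  # imported lazily so the helpers can be exercised offline

        client = boto3.client("sagemaker-runtime")
    body = json.loads(event.get("body") or "{}")
    question = sanitize(body.get("question", ""))
    if not question:
        return {"statusCode": 400, "body": json.dumps({"error": "empty question"})}
    answer = invoke_text_endpoint(client, TEXT_ENDPOINT_NAME, build_prompt(question))
    return {"statusCode": 200, "body": json.dumps({"answer": answer})}
```

For the RAG page, the same handler would first retrieve matching context from OpenSearch Service and pass it as the second argument to `build_prompt`.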

Dataset overview

The dataset used for this solution is pile-of-law, which is available in the Hugging Face repository. This dataset is a large corpus of legal and administrative data. For this example, we use train.cc_casebooks.jsonl.xz from this repository. This is a collection of educational casebooks curated in JSONL format.
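As a rough sketch of how such a file can be read, the following helper streams records from an xz-compressed JSONL file using only the Python standard library. The function name is illustrative, and the `text` field name is an assumption about the record layout, so verify it against the file you download.

```python
import json
import lzma
from typing import Iterator


def iter_casebooks(path: str, text_field: str = "text") -> Iterator[str]:
    """Yield the text of each record in an xz-compressed JSONL file."""
    with lzma.open(path, mode="rt", encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            record = json.loads(line)
            # Records without the assumed text field are ignored.
            if text_field in record:
                yield record[text_field]
```

Because the function is a generator, the casebooks are decompressed and parsed one record at a time instead of being loaded into memory all at once.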

Prerequisites

Before getting started, make sure you have the following prerequisites:

- An AWS account.

- An Amazon SageMaker Studio domain managed policy attached to the AWS Identity and Access Management (IAM) execution role. For instructions on assigning permissions to the role, refer to Amazon SageMaker API Permissions: Actions, Permissions, and Resources Reference. In this case, you need to assign permissions as allocated to Amazon Augmented AI (Amazon A2I). For more information, refer to Amazon SageMaker Identity-Based Policy Examples.

- An Amazon Simple Storage Service (Amazon S3) bucket. For instructions, refer to Creating a bucket.

- The AWS Cloud Development Kit (AWS CDK) for Python, which this post uses. Follow the instructions in Getting Started with the AWS CDK to set up your local environment and bootstrap your development account.

- This AWS CDK project requires SageMaker instances (two ml.g5.2xlarge and one ml.p3.2xlarge). You may need to request a quota increase.

Implement the solution

An AWS CDK project that includes all the architectural components has been made available in this AWS Samples GitHub repository. To implement this solution, do the following:

- Clone the GitHub repository to your computer.

- Go to the root folder.

- Initialize the Python virtual environment.

- Install the required dependencies specified in the requirements.txt file.

- Initialize AWS CDK in the project folder.

- Bootstrap AWS CDK in the project folder.

- Using the AWS CDK deploy command, deploy the stacks.

- Go to the Amplify folder within the project folder.

- Initialize Amplify and accept the defaults provided by the CLI.

- Add Amplify hosting.

- Publish the Amplify front end from within the Amplify folder and note the domain name provided at the end of the run.

- On the Amazon Cognito console, add a user to the Amazon Cognito instance that was provisioned with the deployment.

- Go to the domain name that you noted when publishing the Amplify front end and provide the Amazon Cognito login details to access the application.

Trigger an OpenSearch indexing job

The AWS CDK project deployed a Lambda function named GenAIServiceTxt2EmbeddingsOSIndexingLambda. Navigate to this function on the Lambda console.

Run a test with an empty payload.
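If you prefer to trigger the function from a script instead of the console, a minimal sketch with the AWS SDK for Python (Boto3) could look like the following. The helper name is illustrative, and the function name deployed in your account may differ from the one shown (for example, if a suffix is appended during deployment), so treat it as an assumption.

```python
import json


def trigger_indexing(
    lambda_client,
    function_name: str = "GenAIServiceTxt2EmbeddingsOSIndexingLambda",
) -> int:
    """Invoke the indexing Lambda function synchronously with an empty JSON payload."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=json.dumps({}).encode("utf-8"),
    )
    # The HTTP status code of the invocation (200 for a successful synchronous call).
    return response["StatusCode"]
```

To use it, create a client with `boto3.client("lambda")` in an environment that has AWS credentials and a default Region configured, then call `trigger_indexing(client)`.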

This Lambda function triggers a Fargate task on Amazon Elastic Container Service (Amazon ECS) running within the VPC. This Fargate task segments the included JSONL file and creates an embeddings index. Each segment's embedding is the result of invoking the text-to-embeddings endpoint deployed as part of the AWS CDK project.
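The segmentation and indexing logic can be pictured with the sketch below. It is not the Fargate task's actual code. The word-window sizes, the field names `text` and `embedding`, and the embedding dimension of 4096 are assumptions for illustration. The index and query bodies follow the general shape of OpenSearch k-NN requests.

```python
from typing import Dict, List

# Assumed output size of the embeddings model; verify against your endpoint.
EMBEDDING_DIM = 4096


def segment_text(text: str, max_words: int = 200, overlap: int = 20) -> List[str]:
    """Split text into windows of at most max_words words that overlap by `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    segments = []
    for start in range(0, len(words), step):
        segments.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return segments


def knn_index_body(dimension: int = EMBEDDING_DIM) -> Dict:
    """Index definition with k-NN enabled and a knn_vector field for the embeddings."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                "text": {"type": "text"},
                "embedding": {"type": "knn_vector", "dimension": dimension},
            }
        },
    }


def knn_query_body(vector: List[float], k: int = 3) -> Dict:
    """Search request that returns the k segments nearest to a question embedding."""
    return {"size": k, "query": {"knn": {"embedding": {"vector": vector, "k": k}}}}
```

Overlapping windows are one common way to avoid cutting a relevant passage in half at a segment boundary. Each segment would be sent to the embeddings endpoint and stored as a document with its `text` and `embedding` fields.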

Clean up

To avoid future charges, delete the SageMaker endpoints and stop all Lambda functions. Also, delete the output data in Amazon S3 that you created while running the application workflow. You must delete the data in the S3 buckets before you can delete the buckets.
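As one possible way to script this cleanup, the following sketch uses Boto3 clients that you pass in. The helper names are illustrative, and the endpoint and bucket names are whatever your deployment created. The bucket helper assumes an unversioned bucket; a versioned bucket would also need its object versions removed.

```python
from typing import Iterable


def delete_endpoints(sagemaker_client, endpoint_names: Iterable[str]) -> None:
    """Delete each endpoint together with its endpoint configuration."""
    for name in endpoint_names:
        config = sagemaker_client.describe_endpoint(EndpointName=name)["EndpointConfigName"]
        sagemaker_client.delete_endpoint(EndpointName=name)
        sagemaker_client.delete_endpoint_config(EndpointConfigName=config)


def empty_and_delete_bucket(s3_client, bucket: str) -> None:
    """Remove every object from the bucket, then delete the bucket itself."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": keys})
    # The bucket can only be deleted once it is empty.
    s3_client.delete_bucket(Bucket=bucket)
```

The clients would be created with `boto3.client("sagemaker")` and `boto3.client("s3")`. Resources that belong to the AWS CDK stacks can typically also be removed with the CDK's own `cdk destroy` command.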

Conclusion

In this post, we demonstrated an end-to-end approach to creating a secure enterprise application using generative AI and RAG. You can use this approach to build secure and scalable generative AI applications on AWS. We encourage you to deploy the AWS CDK app into your account and build the generative AI solution.

Additional resources

For more information about Generative AI applications on AWS, refer to the following:

- Accelerate your learning towards AWS Certification exams with automated quiz generation using Amazon SageMaker foundations models

- Build a powerful question answering bot with Amazon SageMaker, Amazon OpenSearch Service, Streamlit, and LangChain

- Deploy generative AI models from Amazon SageMaker JumpStart using the AWS CDK

- Get started with generative AI on AWS using Amazon SageMaker JumpStart

- Build a serverless meeting summarization backend with large language models on Amazon SageMaker JumpStart

About the Authors

Jay Pillai is a Principal Solutions Architect at Amazon Web Services. As an Information Technology Leader, Jay specializes in artificial intelligence, data integration, business intelligence, and user interface domains. He holds 23 years of extensive experience working with several clients across real estate, financial services, insurance, payments, and market research business domains.


Shikhar Kwatra is an AI/ML Specialist Solutions Architect at Amazon Web Services, working with a leading Global System Integrator. He has earned the title of one of the Youngest Indian Master Inventors with over 500 patents in the AI/ML and IoT domains. Shikhar aids in architecting, building, and maintaining cost-efficient, scalable cloud environments for the organization, and supports the GSI partner in building strategic industry solutions on AWS. Shikhar enjoys playing guitar, composing music, and practicing mindfulness in his spare time.


Karthik Sonti leads a global team of solution architects focused on conceptualizing, building and launching horizontal, functional and vertical solutions with Accenture to help our joint customers transform their business in a differentiated manner on AWS.
