Streamline access to ISO-rating content changes with Verisk rating insights and Amazon Bedrock

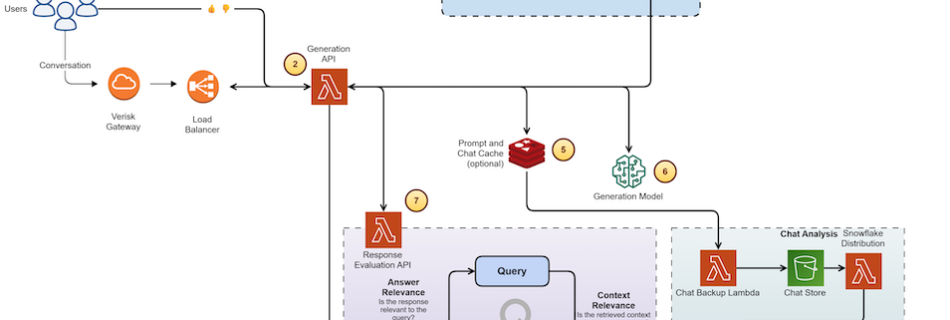

This post is co-written with Samit Verma, Eusha Rizvi, Manmeet Singh, Troy Smith, and Corey Finley from Verisk.

Verisk Rating Insights, a feature of ISO Electronic Rating Content (ERC), is a tool that summarizes ISO Rating changes between two releases. Traditionally, extracting specific filing information or identifying differences across multiple …