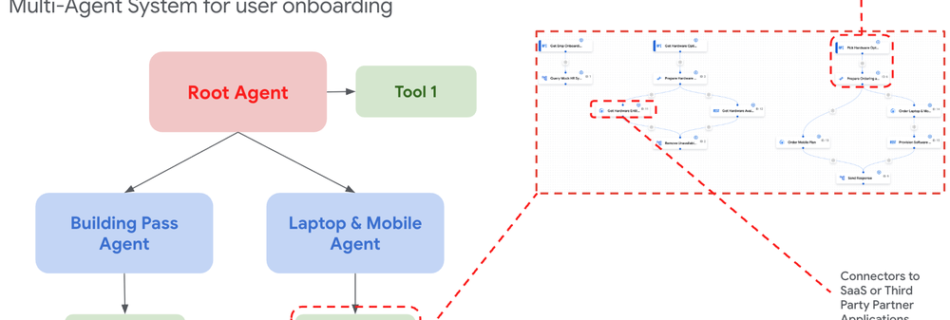

Build intelligent employee onboarding with Gemini Enterprise

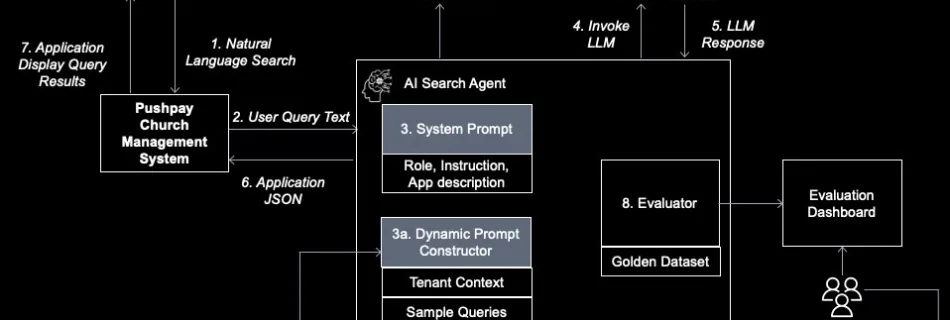

Employee onboarding is rarely a linear process. It’s a complex web of dependencies that vary significantly based on an individual’s specific profile. For example, even a simple request for a laptop requires the system to cross-reference the employee’s role, function, and seniority level to determine whether they need a high-powered workstation or a standard mobile …
