Getting started with deploying real-time models on Amazon SageMaker

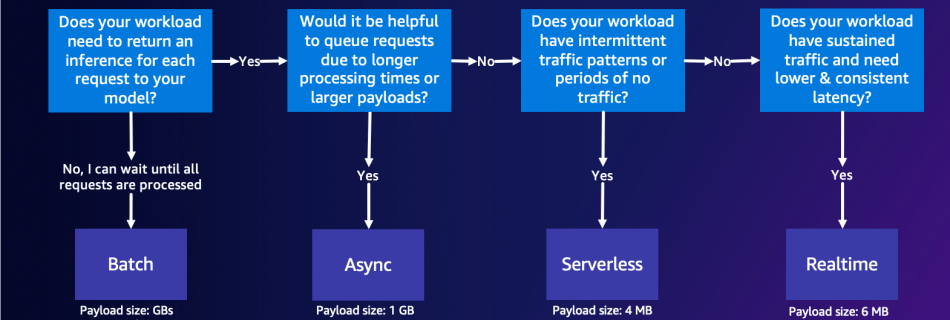

Amazon SageMaker is a fully managed service that gives every developer and data scientist the ability to quickly build, train, and deploy machine learning (ML) models at scale. The value of ML is realized in inference. SageMaker offers four inference options: Real-Time Inference, Serverless Inference, Asynchronous Inference, and Batch Transform. These four options can be broadly classified into Online …
