Enterprise-grade natural language to SQL generation using LLMs: Balancing accuracy, latency, and scale

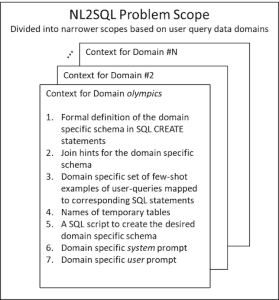

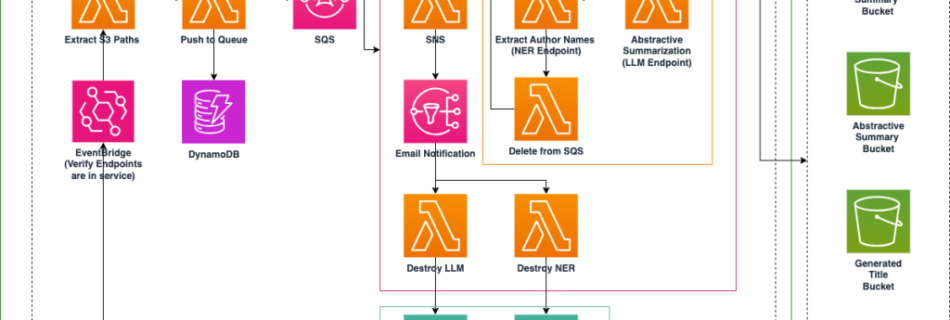

This blog post is co-written with Renuka Kumar and Thomas Matthew from Cisco.

Enterprise data by its very nature spans diverse data domains, such as security, finance, product, and HR. Data across these domains is often maintained in disparate data environments (such as Amazon Aurora, Oracle, and Teradata), with each managing hundreds or perhaps thousands …