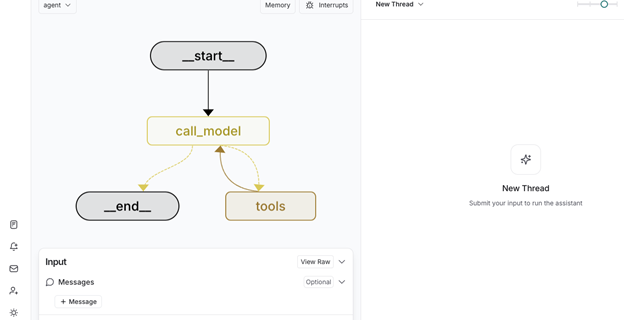

Build multi-agent systems with LangGraph and Amazon Bedrock

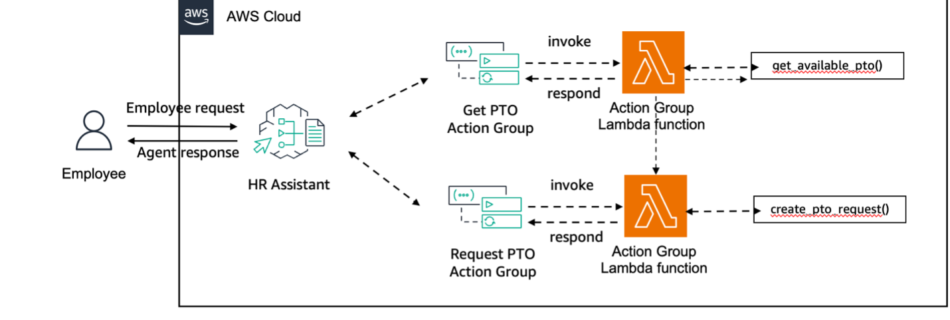

Large language models (LLMs) have raised the bar for human-computer interaction: users now expect to communicate with their applications in natural language. Beyond simple language understanding, real-world applications must manage complex workflows, connect to external data, and coordinate multiple AI capabilities. Imagine scheduling a doctor’s appointment where an AI agent …