How to run LLaMA-13B or OpenChat-8192 on a Single GPU — Pragnakalp Techlabs: AI, NLP, Chatbot, Python Development

Recently, numerous open-source large language models (LLMs) have been launched. These powerful models hold great potential for a wide range of applications. However, one major challenge that arises is the limitation of resources when it comes to testing these models. While platforms like Google Colab Pro offer the ability to test up to 7B models, what options do we have when we wish to experiment with even larger models, such as 13B?

In this blog post, we will see how we can run the LLaMA 13B and OpenChat 13B models on a single GPU. We use the GPU that Google Colab Pro provides, a T4, together with 25 GB of system RAM. Let’s go through it step by step.
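To see why a 13B model is a tight fit, a rough estimate of the memory needed for the weights alone helps. The sketch below is only back-of-the-envelope arithmetic: it assumes exactly 13 billion parameters and ignores activations, the KV cache, and quantization metadata, so real usage will be higher. The function name `weight_memory_gib` is ours, not part of any library.

```python
# Rough estimate of the memory needed just to hold the model weights.
# Assumption: 13e9 parameters; everything except the weights is ignored.

BITS_PER_WEIGHT = {
    "float32": 32,
    "float16/bfloat16": 16,
    "int8": 8,
    "4-bit": 4,
}


def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Return the storage needed for the weights alone, in GiB."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1024**3


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name:>17}: {weight_memory_gib(13e9, bits):5.1f} GiB")
```

Under these assumptions the weights take roughly 24 GiB at 16 bits and roughly 6 GiB at 4 bits. Assuming the T4 has 16 GB of GPU memory (a figure the post itself does not state), only the 4-bit variant leaves room for everything else.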

Step 1:

Install the requirements. You need to install accelerate and transformers from source, and make sure you have the latest version of the bitsandbytes library (0.39.0 at the time of writing).

    !pip install -q -U bitsandbytes
    !pip install -q -U git+https://github.com/huggingface/transformers.git
    !pip install -q -U git+https://github.com/huggingface/peft.git
    !pip install -q -U git+https://github.com/huggingface/accelerate.git
    !pip install sentencepiece
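After installing, it can be worth confirming which versions actually ended up in the environment. The following is a small sketch using only the Python standard library; the helper name `installed_versions` is our own, and the package list simply mirrors the install commands above.

```python
from importlib.metadata import PackageNotFoundError, version

# Packages installed in Step 1.
PACKAGES = ["bitsandbytes", "transformers", "peft", "accelerate", "sentencepiece"]


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions(PACKAGES).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```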

Step 2:

We use quantization in our approach, through the bitsandbytes integration in the transformers library. It supports several 4-bit variants, such as NF4 (4-bit NormalFloat) and pure FP4. With 4-bit bitsandbytes, weights are stored in 4 bits, while computation still happens in 16 or 32 bits; float16, bfloat16, or float32 can be chosen as the compute dtype. Note that BitsAndBytesConfig defaults to FP4 when no quantization type is given, so NF4 has to be requested explicitly with bnb_4bit_quant_type="nf4".
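To make "stored in 4 bits, computed in higher precision" concrete, here is a deliberately simplified illustration in plain Python. It is not the bitsandbytes algorithm: NF4 and FP4 use their own sets of 16 representable values, whereas this toy version uses evenly spaced integer codes and a single scale per block. The function names are ours.

```python
def quantize_4bit(block):
    """Quantize a block of floats to integer codes in [-7, 7] plus one float scale.

    Toy absmax scheme: the largest magnitude in the block maps to +/-7.
    """
    absmax = max(abs(x) for x in block) or 1.0  # avoid dividing by zero
    scale = absmax / 7
    codes = [round(x / scale) for x in block]
    return codes, scale


def dequantize_4bit(codes, scale):
    """Recover approximate floats; this is what the computation would then use."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.0, 0.9, -0.07]
    codes, scale = quantize_4bit(weights)
    restored = dequantize_4bit(codes, scale)
    for w, c, r in zip(weights, codes, restored):
        print(f"original={w:+.3f}  code={c:+d}  restored={r:+.3f}")
```

Each code fits in 4 bits, and the only extra storage is one scale per block. The restored values differ from the originals by at most half a quantization step, which is the precision traded away for the smaller footprint.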

For faster matrix multiplication and training, we recommend a 16-bit compute dtype; if you do not set one, the compute dtype defaults to torch.float32. The BitsAndBytesConfig class in transformers lets you change these parameters as needed.

    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    # Store the weights in 4 bits and run the computation in bfloat16.
    quantization_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_compute_dtype=torch.bfloat16,
    )
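If you want to experiment with the other options, one way is to collect them in a small helper first. The sketch below is an assumption about how you might organize this, not something from the original post: `build_4bit_kwargs` and `make_config` are hypothetical names, while `load_in_4bit`, `bnb_4bit_quant_type`, `bnb_4bit_use_double_quant`, and `bnb_4bit_compute_dtype` are BitsAndBytesConfig parameters.

```python
ALLOWED_QUANT_TYPES = {"fp4", "nf4"}
ALLOWED_COMPUTE_DTYPES = {"float16", "bfloat16", "float32"}


def build_4bit_kwargs(quant_type="nf4", compute_dtype="bfloat16", double_quant=False):
    """Validate the options and return keyword arguments for BitsAndBytesConfig.

    compute_dtype is kept as a string here and resolved to a torch dtype later,
    so this helper can run without torch installed.
    """
    if quant_type not in ALLOWED_QUANT_TYPES:
        raise ValueError(f"quant_type must be one of {sorted(ALLOWED_QUANT_TYPES)}")
    if compute_dtype not in ALLOWED_COMPUTE_DTYPES:
        raise ValueError(f"compute_dtype must be one of {sorted(ALLOWED_COMPUTE_DTYPES)}")
    return {
        "load_in_4bit": True,
        "bnb_4bit_quant_type": quant_type,
        "bnb_4bit_use_double_quant": double_quant,
        "bnb_4bit_compute_dtype": compute_dtype,
    }


def make_config(**options):
    """Build a BitsAndBytesConfig; heavy imports are deferred until it is called."""
    import torch
    from transformers import BitsAndBytesConfig

    kwargs = build_4bit_kwargs(**options)
    # Turn the dtype name (e.g. "bfloat16") into the torch dtype object.
    kwargs["bnb_4bit_compute_dtype"] = getattr(torch, kwargs["bnb_4bit_compute_dtype"])
    return BitsAndBytesConfig(**kwargs)
```

For example, `make_config(quant_type="nf4", double_quant=True)` would give a configuration that can be passed as `quantization_config` when loading the model.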

Step 3:

With the configuration in place, we now load the tokenizer and the model. Here we use the OpenChat model, but you can use any 13B model available on the Hugging Face Hub.

If you want to use the OpenLLaMA 13B model instead, change model_id to "openlm-research/open_llama_13b" and run the steps below again.

    model_id = "openchat/openchat_8192"

    tokenizer = AutoTokenizer.from_pretrained(model_id)

    # The weights are quantized to 4 bits while they are being loaded.
    model_bf16 = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=quantization_config,
    )

Step 4:

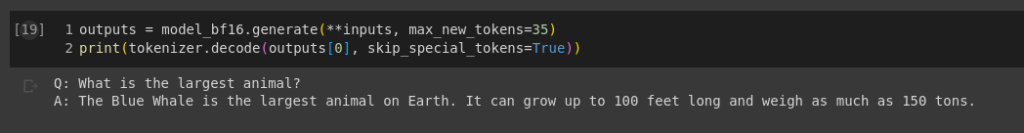

Once the model is loaded, it is time to test it. You can provide any input you like, and set the max_new_tokens parameter to the number of tokens you would like to generate.

    text = "Q: What is the largest animal?\nA:"
    device = "cuda:0"

    # Tokenize the prompt and move the tensors to the GPU.
    inputs = tokenizer(text, return_tensors="pt").to(device)

    # Generate up to 35 new tokens and decode them back to text.
    outputs = model_bf16.generate(**inputs, max_new_tokens=35)
    print(tokenizer.decode(outputs[0], skip_special_tokens=True))
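The decoded text contains the prompt followed by the continuation, and the model may keep going with further "Q:" lines of its own. A small pair of helpers can build the prompt and pull out just the first answer. This is a sketch with hypothetical names (`build_prompt`, `extract_answer`), and keeping only the first line is a heuristic that fits this "Q:/A:" format rather than a general rule.

```python
def build_prompt(question: str) -> str:
    """Format a question in the same "Q: ...\\nA:" style used above."""
    return f"Q: {question}\nA:"


def extract_answer(decoded: str, prompt: str) -> str:
    """Drop the echoed prompt and keep only the first line of the continuation."""
    # Fall back to the full text if decoding did not reproduce the prompt exactly.
    completion = decoded[len(prompt):] if decoded.startswith(prompt) else decoded
    completion = completion.strip()
    return completion.split("\n", 1)[0].strip() if completion else ""


# Intended usage with the objects from the steps above (requires the loaded model):
#   prompt = build_prompt("What is the largest animal?")
#   inputs = tokenizer(prompt, return_tensors="pt").to("cuda:0")
#   outputs = model_bf16.generate(**inputs, max_new_tokens=35)
#   decoded = tokenizer.decode(outputs[0], skip_special_tokens=True)
#   print(extract_answer(decoded, prompt))
```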

Output:

With this quantization technique, you can run 13B models on a single GPU, including the one provided by Google Colab Pro.

Originally published at How To Run LLaMA-13B Or OpenChat-8192 On A Single GPU on July 14, 2023.

This post was originally published in Chatbots Life on Medium.