Palantir Foundry for AI Governance

Ethical AI in Action

Editor’s Note: Written by Palantir’s Privacy and Civil Liberties (PCL) team, this blog post builds on our belief that, in order to preserve the truly valuable contributions of AI/ML, AI ethics and efficacy must move beyond the performative towards operational realities. In future posts, we’ll address other pressing themes and challenges in AI governance, including the rapid development and deployment of generative AI broadly, and of Large Language Models (LLMs) specifically.

Organizations leverage AI within Palantir Foundry to advance and accelerate work in practical ways: saving time on repeatable operational actions and allowing their subject matter experts to focus on analysis and decision-making. At the same time, AI safety, reliability, explainability, and governance are foundational to the success of these applications. These capabilities reflect Palantir’s broader commitment to building technologies that enable truly effective and ethically responsible outcomes in the most important settings, from civil government and defense to healthcare and the commercial sector.

In this blog post, we demonstrate ML operationalization in action through two case studies showing how AI-enabled and AI-assisted applications in Palantir Foundry are used in finance and the life sciences. We begin with an overview of the modeling lifecycle.

A Complete Modeling Lifecycle

Responsible and operationalized AI/ML development requires a holistic modeling process that is comprehensive, governable, and responsive to operational feedback from subject matter experts who are empowered to translate ML outputs into real actions.

Palantir Foundry enables these capabilities through a full modeling lifecycle (see Figure 1), including: data integration, feature generation for development and production, model training and configuration, evaluation, maintenance, deployment, governance, operationalization, and feedback gathering.

Collectively, these capabilities provide a complete framework for the trustworthy and secure deployment and use of models in live operational settings, with deep connectivity to subject matter experts.

Palantir’s approach places operational AI/ML governance at the core of every stage of model development. These capabilities, highlighted in the case studies that follow, include:

- Transparency around risk thresholds: Users can set the thresholds for routing decisions; for example, a case with a hypothetical model risk score of 0.9 or above is sent automatically for final approval, while a case scoring below 0.9 is sent to an analyst for investigation.

- Model calibration: Users can review historical risk scores against observed outcomes to see whether the risk estimates are accurate or need to be adjusted.

- Metadata: Users can easily review and edit definitions of inputs, outputs, artifacts, and execution types in a centralised location.

- Full auditing of model decisions: A persistent and reliable audit trail is provided for all data processing steps for later analysis, troubleshooting, oversight, and accountability.

- Versioning: All changes to data, models, parameters, and conditions are tracked, creating transparency around which models and data were used for which decisions.

- Human-in-the-loop gates: Critical decisions require human input. This grounds decisions in important context while still reducing the resources spent on repetitive, manual data collection and decision-making.

- Access controls: Firm permissions can be set around who can access the applications and data, what types of data are visible to which roles, and other intentional data minimization practices. This includes purpose-based, discretionary, and mandatory access controls.

- Data protection controls: Zero-trust data protection controls natively allow security officers to define and manage network connectivity and other security elements. This allows for total control of where data may be sent inside and outside of the platform.

- Model management gates: Users can create teams and roles responsible for validating and approving models, and can document considerations for individual data subsets.
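The risk-threshold, auditing, and versioning capabilities listed above fit together in a few lines of code. The Python sketch below is hypothetical and is not Foundry's API. `Case`, `AuditLog`, `route_case`, the destination names, and the `model_version` tag are all illustrative, and the 0.9 cut-off simply mirrors the example in the risk-threshold bullet.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical cut-off mirroring the 0.9 example; in practice this is user-configured.
AUTO_APPROVAL_THRESHOLD = 0.9


@dataclass(frozen=True)
class Case:
    case_id: str
    risk_score: float  # model output in [0, 1]


@dataclass
class AuditLog:
    """Append-only, in-memory record of every routing decision (illustrative only)."""
    entries: list = field(default_factory=list)

    def record(self, **entry) -> None:
        entry["timestamp"] = datetime.now(timezone.utc).isoformat()
        self.entries.append(entry)


def route_case(case: Case, log: AuditLog, model_version: str,
               threshold: float = AUTO_APPROVAL_THRESHOLD) -> str:
    """Send cases at or above the threshold to final approval, the rest to an analyst."""
    if not 0.0 <= case.risk_score <= 1.0:
        raise ValueError(f"risk score out of range: {case.risk_score}")
    destination = "final_approval" if case.risk_score >= threshold else "analyst_review"
    # Store the score, threshold, and model version so the decision can be audited later.
    log.record(case_id=case.case_id, risk_score=case.risk_score,
               threshold=threshold, model_version=model_version,
               destination=destination)
    return destination


if __name__ == "__main__":
    log = AuditLog()
    print(route_case(Case("c-001", 0.93), log, model_version="v3"))  # final_approval
    print(route_case(Case("c-002", 0.41), log, model_version="v3"))  # analyst_review
    print(len(log.entries))  # 2
```

Logging the threshold and model version next to each decision lets a reviewer later reconstruct which model and which settings produced a given routing. An out-of-range score is rejected before anything is logged.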

The following case studies demonstrate how ethically responsible and trustworthy AI can be brought to bear on important private and public sector applications.

Case Study 1: Transforming the approach to financial crime

Financial institutions around the world have seen steady growth in the volume of money laundering and other financial crimes, and the strategies used to evade detection are becoming increasingly sophisticated. This presents a challenge for global financial institutions in the Anti-Money Laundering (AML) environment, which must counter complex, large-scale financial crime while also meeting numerous regulatory requirements.

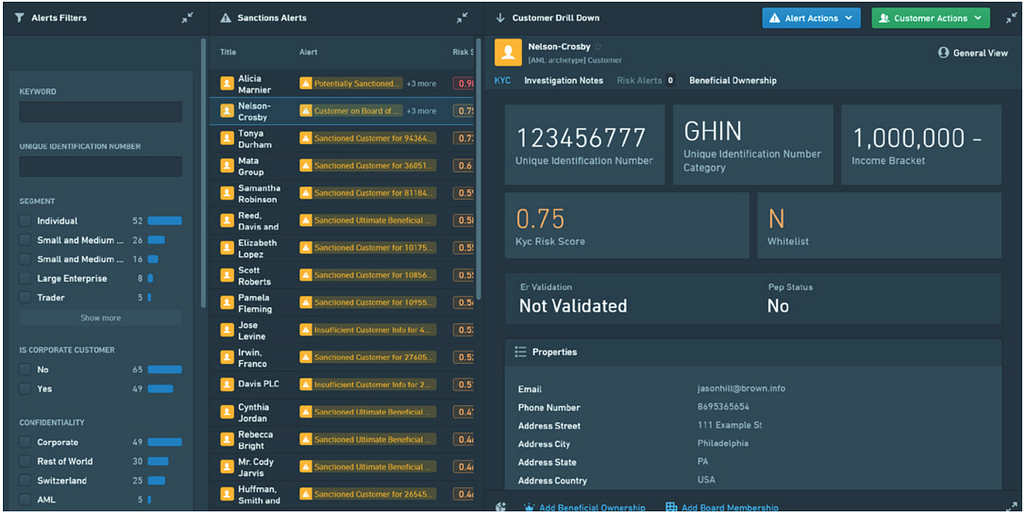

Detection efforts require banks to screen potential customers against Politically Exposed Persons (PEP) and sanctions lists, a core part of the Know Your Customer (KYC) process. The ultimate goal of this screening is to ensure that banks do not, directly or indirectly, provide funding infrastructure for terrorism, embargoed entities, or other groups on international sanctions lists.

Historically, analysts have had to devote significant time to manual searches on potential customers, often combing through massive troves of disparate data to gather potential signals, to confirm that those customers meet bank-specific requirements and have no ties to entities on common global sanctions lists. These costly manual searches leave less time for in-depth analysis, resulting in lower accuracy and more frequent regulatory fines.

These legacy processes, combined with stringent global regulatory requirements and the need to meet the highest standards of security, represent an ideal use case for AI/ML augmentation within Palantir Foundry.

Applying an AML Enhanced Sanctions Screening Model

The ML-powered process begins with Palantir’s Enhanced Sanctions Screening module (Figure 2). The module can be easily integrated with the legacy systems used to carry out myriad day-to-day bank operations. Institutions start by linking their data sources, including relevant government lists (OFAC, UNSCR, etc.), prohibited global entities, the required data on potential customers, and any other relevant sources. Then, using context-specific configurations, users set risk thresholds within the matching model. These thresholds are mapped to automated or manual decision-making workflows and determine escalation paths with prioritization logic. The required data on the prospective customer is cleaned, run through entity matching and match aggregation, and given a risk score by the model.
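The post does not describe the internals of the matching model. The following Python sketch therefore illustrates only the overall shape of the clean, match, aggregate, and score steps, and it rests on several stated assumptions. It swaps in plain string similarity from the standard library's `difflib.SequenceMatcher` in place of the module's ML phrase matching. It treats the strongest single match as the aggregated risk score. It uses a hypothetical three-entry watchlist. The function names are illustrative and are not part of any Palantir API.

```python
import re
import unicodedata
from difflib import SequenceMatcher

# Hypothetical watchlist; real entries would come from linked lists such as OFAC or UNSCR.
WATCHLIST = ["Ivan Petrov", "Acme Trading LLC", "Maria Gonzalez"]

# Legal-form suffixes dropped during cleaning (illustrative, not exhaustive).
LEGAL_SUFFIXES = {"llc", "ltd", "inc", "plc", "gmbh", "sa"}


def clean(name: str) -> str:
    """Strip accents, lowercase, replace punctuation with spaces, drop legal suffixes."""
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode()
    tokens = re.sub(r"[^a-z0-9 ]", " ", ascii_name.lower()).split()
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)


def token_sort(name: str) -> str:
    """Sort tokens so that 'petrov ivan' and 'ivan petrov' compare as equal."""
    return " ".join(sorted(name.split()))


def match_scores(candidate: str, watchlist: list[str]) -> dict[str, float]:
    """Entity matching: a similarity in [0, 1] between the candidate and each entry."""
    c = token_sort(clean(candidate))
    return {entry: SequenceMatcher(None, c, token_sort(clean(entry))).ratio()
            for entry in watchlist}


def risk_score(candidate: str, watchlist: list[str]) -> tuple[float, str]:
    """Match aggregation: here simply the best single match and the entry behind it."""
    scores = match_scores(candidate, watchlist)
    best = max(scores, key=scores.get)
    return scores[best], best


if __name__ == "__main__":
    score, hit = risk_score("PETROV, Ivan", WATCHLIST)
    print(hit, round(score, 2))  # Ivan Petrov 1.0
```

Sorting tokens before comparing cheaply tolerates reordered names such as "PETROV, Ivan". Transliteration variants, aliases, and secondary identifiers like dates of birth are ignored in this sketch.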

Governance is a core component of the Enhanced Sanctions Screening workflow (Figure 3). For instance, the “Human Validation” stage prompts analysts to validate the model’s score by confirming or rejecting final decisions, creating a key feedback loop that can support model updates and retraining.
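Analyst confirmations and rejections from the "Human Validation" stage are also the raw material for the model-calibration review described in the lifecycle section. The following is a hedged Python illustration only. It assumes each validated case is stored as a pair of the model's score and whether the analyst confirmed the match. It then bins historical scores and compares the mean predicted score in each bin with the share of cases analysts actually confirmed. The `Validation` record, `calibration_table`, and the five-bin default are hypothetical choices, not a documented Foundry feature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Validation:
    """One analyst decision on a screened case (hypothetical record layout)."""
    risk_score: float  # model score in [0, 1]
    confirmed: bool    # True if the analyst confirmed the match, False if rejected


def calibration_table(validations, n_bins=5):
    """Bin historical scores and compare the mean predicted score with the confirmed rate.

    Returns (bin_low, bin_high, mean_score, confirmed_rate, count) for each non-empty bin.
    """
    bins = [[] for _ in range(n_bins)]
    for v in validations:
        if not 0.0 <= v.risk_score <= 1.0:
            raise ValueError(f"risk score out of range: {v.risk_score}")
        # min() keeps a score of exactly 1.0 in the top bin.
        idx = min(int(v.risk_score * n_bins), n_bins - 1)
        bins[idx].append(v)

    table = []
    for i, members in enumerate(bins):
        if not members:
            continue
        mean_score = sum(v.risk_score for v in members) / len(members)
        confirmed_rate = sum(v.confirmed for v in members) / len(members)
        table.append((i / n_bins, (i + 1) / n_bins, mean_score, confirmed_rate, len(members)))
    return table


if __name__ == "__main__":
    history = [Validation(0.95, True), Validation(0.92, True), Validation(0.91, False),
               Validation(0.30, False), Validation(0.25, False)]
    for low, high, mean_score, rate, n in calibration_table(history):
        print(f"[{low:.1f}, {high:.1f}] mean score {mean_score:.2f}, confirmed {rate:.0%} (n={n})")
```

In this made-up history the top bin averages a score of roughly 0.93, yet analysts confirmed only two of its three cases. With realistic volumes, a persistent gap of that kind is the signal that the scores, or the thresholds built on them, need adjusting.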

By leveraging our Enhanced Sanctions Screening module with ML phrase matching, customers have been able to automate 85% of the KYC process and reduce onboarding time ten-fold, all while maximizing governance. In the process, organizations also obtain a centralized, secure profile of those customers for long-term use, including KYC decisions and justifications.

At multiple institutions, analysts can now complete investigations 80% faster with over 20% higher accuracy than with legacy manual reviews. This enables an improved customer experience, consolidated knowledge, and a significantly reduced risk of incorrect decisions and associated fines. For more, see the full case study.

Case Study 2: Improving patient care

Predicting survival rates in cancer patients depends on a number of factors, such as a person’s age and underlying health, the cancer sub-type, and the treatment plan. It is a complex process that relies on clinical expertise and, increasingly, on statistical models. Models that predict survival rates more accurately from the available data, and that incorporate feedback from subject matter experts in real-time settings, help clinicians manage their patients more holistically and identify the next-best treatment options.

In medicine, however, ML models are usually built and trained in environments separate from those in which they are deployed. This traditional approach often leads to issues such as model drift, a lack of feedback integration, and unclear model governance.

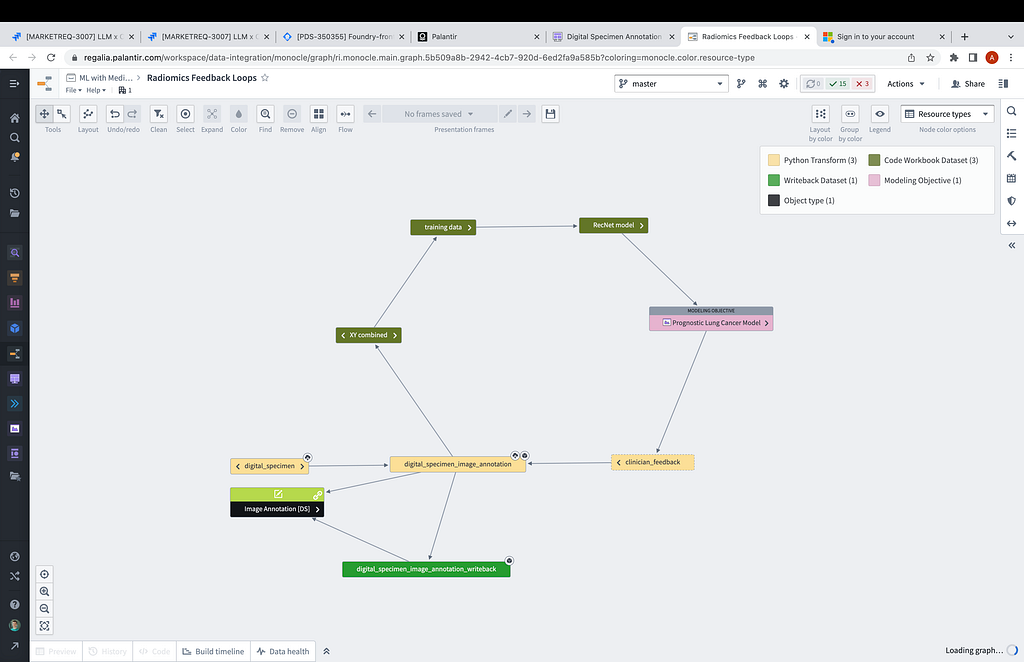

Palantir Foundry is well suited to meet these challenges because models are built (or incorporated) directly into the platform and into a clinician’s operational workflow. This enables a feedback mechanism that accelerates model retraining and redeployment and provides an auditable AI governance pathway.

Accelerating model retraining and redeployment

In practice, clinicians can use Workshop, a point-and-click application-building tool within Foundry, to select an area of a lung image that looks important to them and pass it to the model for a preliminary prognosis (see Figure 4). Drawing on the model’s response as well as their own knowledge, clinicians gain a more accurate understanding of the next diagnostic or treatment options for their patients.

The platform’s foundational governance gives clinicians ongoing oversight. If a model output is not as expected, clinicians can flag it for review by the model owner, allowing data science teams to understand when and why their models are underperforming. Even more significantly, clinicians can label model outputs as incorrect. This action writes data back to an annotated labels dataset, which can then feed into the training data for subsequent model retraining. Once the model is retrained and redeployed, the latest version is also rigorously documented in an auditable way, as illustrated in Figure 1. The result is a clear record of the full history of model versions, including edits made and feedback given. This record supports continuous performance improvement and quick onboarding of new modelers.
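The feedback path just described runs from a flag for review or an "incorrect" label, through write-back to an annotated labels dataset, to retraining and a recorded new version. The rough Python sketch below models that path with in-memory structures. `Feedback`, `LabelsDataset`, `ModelRegistry`, and their field names are hypothetical stand-ins, not Foundry or Workshop APIs. Retraining itself is left out; the sketch only records which corrections a new version consumed and which version it descends from.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

VALID_ACTIONS = ("flag_for_review", "label_incorrect")


@dataclass(frozen=True)
class Feedback:
    """A clinician's judgment on one model output (hypothetical schema)."""
    prediction_id: str
    model_version: str
    clinician: str
    action: str                            # "flag_for_review" or "label_incorrect"
    corrected_label: Optional[str] = None  # required only for "label_incorrect"


@dataclass
class LabelsDataset:
    """Annotated labels dataset that clinician feedback is written back to."""
    rows: list = field(default_factory=list)

    def write_back(self, fb: Feedback) -> None:
        if fb.action not in VALID_ACTIONS:
            raise ValueError(f"unknown action: {fb.action}")
        if fb.action == "label_incorrect" and fb.corrected_label is None:
            raise ValueError("labelling an output as incorrect needs a corrected_label")
        self.rows.append(fb)

    def training_labels(self) -> list:
        """Corrections that can be merged into the training data for retraining."""
        return [r for r in self.rows if r.action == "label_incorrect"]

    def review_queue(self) -> list:
        """Flagged outputs awaiting review by the model owner."""
        return [r for r in self.rows if r.action == "flag_for_review"]


@dataclass
class ModelRegistry:
    """Append-only version history recording each version's parent and training corrections."""
    versions: list = field(default_factory=list)

    def register(self, parent: Optional[str], labels_used: list, note: str) -> str:
        version = f"v{len(self.versions) + 1}"
        self.versions.append({
            "version": version,
            "parent": parent,            # lineage: the version this one replaces
            "labels_used": labels_used,  # prediction IDs whose corrections were used
            "note": note,
            "created_at": datetime.now(timezone.utc).isoformat(),
        })
        return version


if __name__ == "__main__":
    labels, registry = LabelsDataset(), ModelRegistry()
    v1 = registry.register(parent=None, labels_used=[], note="initial model")
    labels.write_back(Feedback("p-17", v1, "clinician_a", "label_incorrect", "benign"))
    labels.write_back(Feedback("p-18", v1, "clinician_b", "flag_for_review"))
    # Retraining is out of scope; we only record which corrections it consumed.
    v2 = registry.register(parent=v1,
                           labels_used=[r.prediction_id for r in labels.training_labels()],
                           note="retrained on clinician corrections")
    print([v["version"] for v in registry.versions], registry.versions[-1]["labels_used"])
    # ['v1', 'v2'] ['p-17']
```

Keeping flags and corrections as separate streams mirrors the distinction drawn above. A flag asks the model owner to investigate, while a correction becomes a candidate training label. Each registry entry pointing to its parent is what yields a readable version history.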

The entire process is auditable and provides transparency on inputs and changes across model development and deployment, helping keep the AI governance pathway clear as models continuously adapt. With Foundry, real-world feedback accrues continuously, and the process of retraining and redeploying a model can be accelerated from months to days.

These capabilities help clinicians more effectively identify pathologies and, ultimately, better manage their patients with lung cancer. At the same time, a rigorous data governance framework enables model building and feedback to become an integral part of everyday processes and helps clinicians encode their knowledge into a scalable asset.

Closing Thoughts

As the above use cases demonstrate, organizations leverage AI within Palantir Foundry not only to advance their business in practical ways, but also to deliver on ethical obligations, using scalable governance and keeping a human in the loop for validation and responsibility in decision-making.

By incorporating critical model governance processes into core business operations and enabling teams to work collaboratively and securely on their most significant practical challenges, organizations can better operationalize AI that adds real value.

The field of artificial intelligence will benefit from those who focus on real solutions tied to complex operational settings. At Palantir, we are committed to developing practical applications that solve complex problems today.

Visit our Artificial Intelligence and Machine Learning webpage to learn about AI/ML in Foundry and new improvements to our model management offering.

Authors

Michael Adams, Privacy & Civil Liberties Engineer, Palantir UK

Dr Indra Joshi, Director Health, Research & AI, Palantir Technologies

Palantir Foundry for AI Governance: Ethical AI in action was originally published in Palantir Blog on Medium.