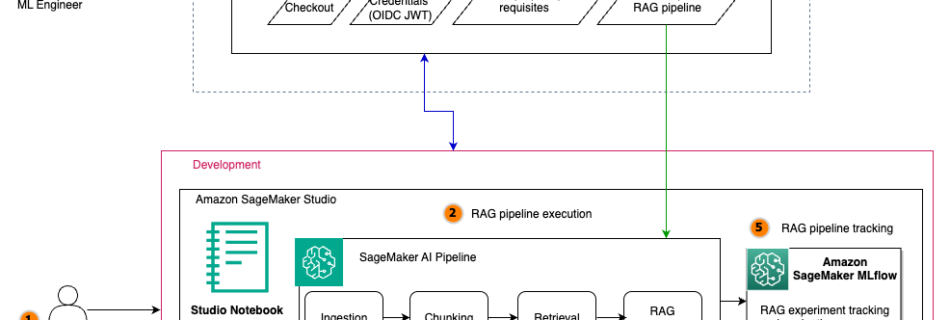

Automate advanced agentic RAG pipeline with Amazon SageMaker AI

Retrieval Augmented Generation (RAG) is a fundamental approach for building advanced generative AI applications that connect large language models (LLMs) to enterprise knowledge. However, crafting a reliable RAG pipeline is rarely a one-shot process. Teams often need to test dozens of configurations (varying chunking strategies, embedding models, retrieval techniques, and prompt designs) before arriving at …
