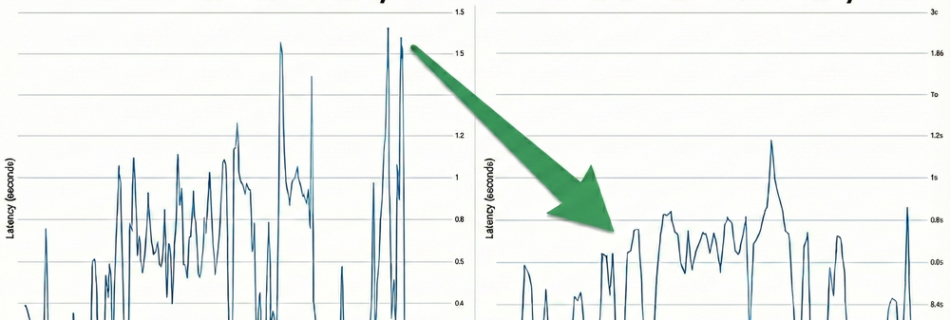

How we cut Vertex AI latency by 35% with GKE Inference Gateway

As generative AI moves from experimentation to production, platform engineers face a universal challenge for inference serving: you need low latency, high throughput, and manageable costs. It is a difficult balance. Traffic patterns vary wildly, from complex coding tasks that require processing huge amounts of data, to quick, chatty conversations that demand instant replies. Standard …
