Despite AI’s potential to drive competitive advantages, realizing its business value remains a challenge. Organizations are still struggling to move AI projects beyond experimentation, with some estimates in the last few years indicating that more than half of machine learning (ML) pilots fail to make it to production.

ML systems are full of hidden technical debt. ML code represents only a small fraction of the infrastructure, components, and processes that are required to realize real-world ML systems. Today’s ML teams need streamlined, efficient workflows to enhance their ML operations if they hope to meet the increasing demands of AI-driven industries.

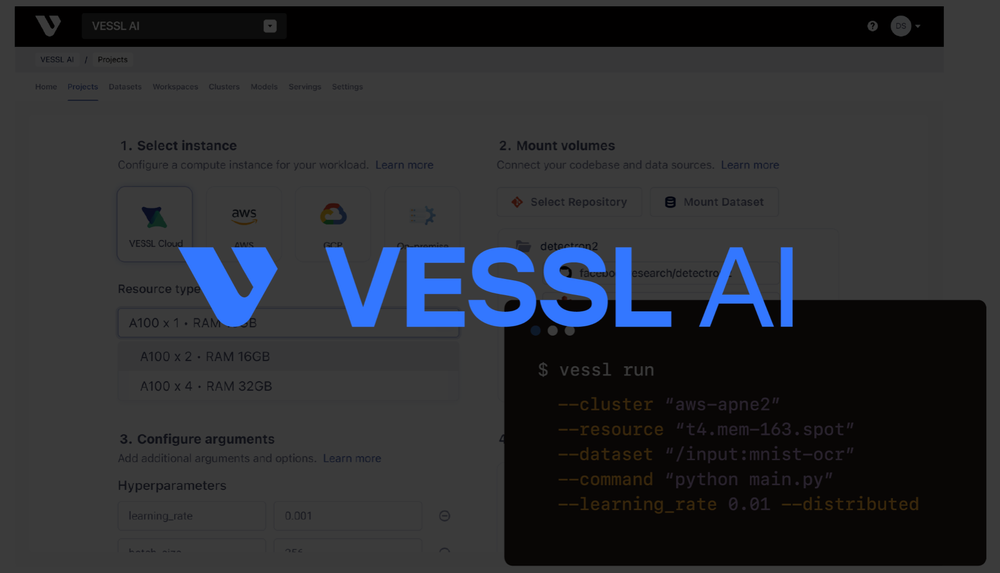

At VESSL AI, we’re on a mission to help organizations scale and accelerate their AI efforts with comprehensive tools, fully managed infrastructure, and a cutting-edge end-to-end MLOps platform built to help bridge the gap between proof-of-concept and production.

Google Cloud: The catalyst in VESSL AI’s journey

From the start, we recognized the transformative potential of cloud computing and services to help us enhance our MLOps platform — and we chose Google Cloud to help us do it.

Google Cloud’s vast resources enable us to deploy solutions seamlessly, ensuring our customers can operate without interruptions and scale as needed. By leveraging Google Cloud services such as Compute Engine and Google Kubernetes Engine (GKE), we provide an infrastructure that scales with each model’s requirements, so customers can use resources optimally. Google Cloud’s extensive network and infrastructure also allow us to deliver our solutions globally, catering to enterprises across continents.

For example, many generative AI startups, including ours, faced the challenge of building a robust infrastructure capable of rapidly sourcing a range of GPUs for running large language models (LLMs) and generative AI models. In response, we created VESSL Run, a unified interface designed for executing all types of ML workloads across various GPU configurations. Using GKE, we constructed robust computing clusters that allow us to dynamically scale ML workloads on Kubernetes. This setup enables us to manage the entire ML lifecycle from training to deployment. In addition, Spot GPUs help our platform achieve optimal computational efficiency, significantly reducing costs without impacting performance.
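As a rough illustration of what scheduling a GPU workload onto Spot capacity in GKE can look like, the sketch below builds a Kubernetes Job manifest in plain Python. It is not how VESSL Run is implemented. The node labels `cloud.google.com/gke-spot` and `cloud.google.com/gke-accelerator` and the `nvidia.com/gpu` resource name are the ones GKE documents; the function name, job name, image, command, and default GPU type are hypothetical placeholders.

```python
import json


def build_spot_gpu_job(name, image, command, gpu_type="nvidia-tesla-t4", gpu_count=1):
    """Return a batch/v1 Job manifest (as a dict) that targets GKE Spot GPU nodes.

    All argument values are illustrative; nothing here is taken from VESSL AI.
    """
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # Spot nodes can be reclaimed at any time, so let the Job
            # controller create replacement pods a few times before giving up.
            "backoffLimit": 4,
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    # Schedule only onto Spot nodes with the requested GPU model.
                    "nodeSelector": {
                        "cloud.google.com/gke-spot": "true",
                        "cloud.google.com/gke-accelerator": gpu_type,
                    },
                    "containers": [
                        {
                            "name": "trainer",
                            "image": image,
                            "command": command,
                            # GPUs are requested through resource limits.
                            "resources": {"limits": {"nvidia.com/gpu": gpu_count}},
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical job name, image, and command, for illustration only.
    manifest = build_spot_gpu_job(
        name="example-training-job",
        image="example.com/hypothetical/trainer:latest",
        command=["python", "train.py"],
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and submitted with `kubectl apply -f`. Because Spot capacity can be preempted, a workload scheduled this way would normally need to checkpoint its progress so that a replacement pod can resume rather than start over.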

Data security is also paramount for AI teams and enterprises, as data is their core business asset. Google Cloud’s rigorous security and compliance protocols allow us to confidently assure our users that we meet the highest standards and requirements. VESSL AI Artifacts, for instance, leverages Google Cloud’s fine-grained access control to securely store datasets, models, and other crucial artifacts. All of our ML datasets and models live in Cloud Storage, and we use Filestore for NFS to ensure teams can always access their data and workloads. As a result, we can ensure that data is not only accessible throughout the entire ML lifecycle but also accurate, consistent, safe, and secure.

Tapping into (even more) powerful AI

More recently, we have added integration with Vertex AI to enhance our capabilities significantly. With Vertex AI, we can now complement our existing MLOps components, allowing users not only to label datasets and train models with minimal manual intervention and expertise but also to use powerful models and AutoML solutions provided by Google. This integration represents a significant advancement in our ability to offer our clients a more comprehensive and efficient service, addressing the evolving needs of AI model development and resulting in even more streamlined ML workflows.

Many organizations are already experiencing transformative benefits that save them time and money by tapping into the power of our platform. With Google Cloud, VESSL AI has helped users craft AI models up to four times faster, saving teams hundreds of hours in going from ideation to deployment. We have also made it possible for them to realize up to 80% savings on cloud expenditure.

Moreover, the ability to work closely with Google Cloud’s team of experts has helped us achieve a deep understanding of the best that Google Cloud has to offer and create a synergistic relationship that benefits our customers.

Looking ahead

While VESSL AI has already made significant strides in the MLOps landscape, our journey has just begun. With strong enterprise success stories and the release of our public beta in 2023, we’re now gearing up for a future where ML teams can integrate multiple third-party cloud platforms and scale their ML workflows seamlessly from training to model deployment.

Furthermore, our participation in the Google for Startups Accelerator program offers us an unparalleled advantage to expand our horizons. This program provides startups with access to a wealth of resources, mentorship, and potential funding opportunities. It acts as a catalyst to help startups scale rapidly, tap into global markets, and refine their offerings — opening doors to work with other industry leaders and a robust ecosystem in which to thrive.

The fusion of AI and operations is revolutionizing industries. We believe our collaboration with Google Cloud will keep us at the forefront of this transformation and hopefully set an example for other startups looking to accelerate growth with the cloud in the new generative AI landscape. Partnering with Google Cloud not only provides innovators with cutting-edge technology but also positions them in a network that can significantly amplify their business reach and potential.

If you are a startup looking to explore how Google Cloud can elevate your operations, visit the Google for Startups Program page for more details about the program and its benefits, including up to $350,000 in Google Cloud credits and technical support to get you up and running.