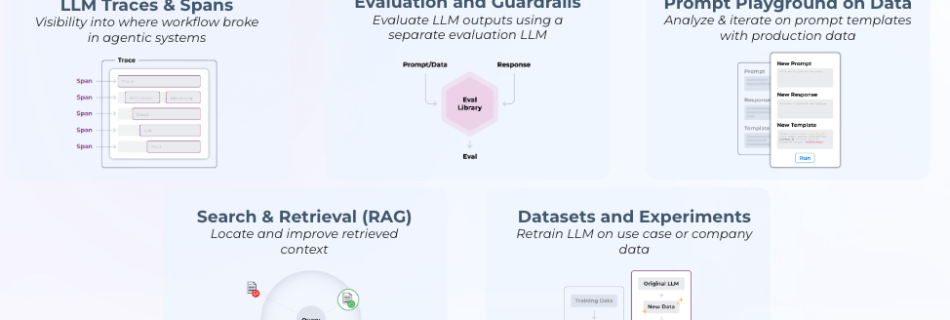

Arize, Vertex AI API: Evaluation workflows to accelerate generative app development and AI ROI

In the rapidly evolving landscape of artificial intelligence, enterprise AI engineering teams must constantly seek cutting-edge solutions to drive innovation, enhance productivity, and maintain a competitive edge. By pairing an AI observability and evaluation platform like Arize AI with the advanced capabilities of Google’s suite of AI tools, enterprises looking to push the boundaries of …