How to use advanced feature engineering to preprocess data in BigQuery ML

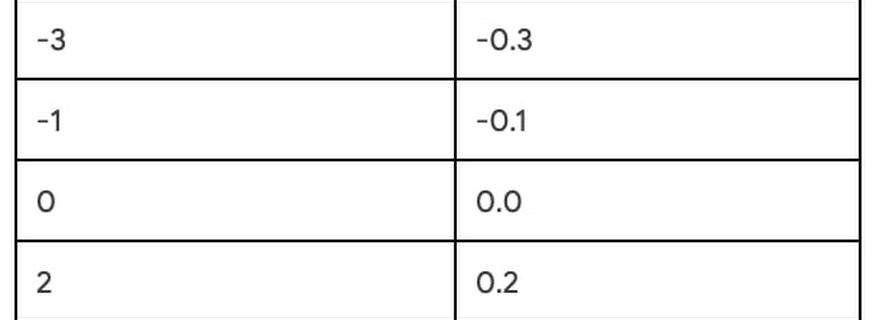

Preprocessing and transforming raw data into features is a critical but time-consuming step in the ML process. This is especially true when a data scientist or data engineer has to move data across different platforms to do MLOps. In this blog post, we describe how we streamline this process by adding two feature engineering capabilities …
